-

Type: Bug

-

Resolution: Duplicate

-

Priority: Major - P3

-

None

-

Affects Version/s: 2.8.0-rc0

-

Component/s: Storage

-

Fully Compatible

-

ALL

-

None

-

3

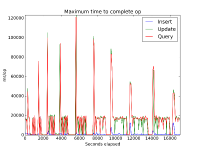

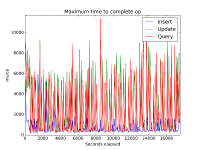

Comparing MMAPv1 to WiredTiger on an 8-core server with 32GB RAM and local SSD (AWS instance).

Throwing a mix of insert/update/query operations at the server, WiredTiger shows really inconsistent throughput in mongostat. Many operations in the log are also landing around the 100ms mark, with inserts taking >1s in some cases. I don't see this inconsistency with MMAPv1.

MMAPv1 engine output from mongostat looks like this:

insert query update delete getmore command flushes mapped vsize res faults locked db idx miss % qr|qw ar|aw netIn netOut conn time

3076 8761 5582 *0 0 2|0 0 6.0g 12.5g 415.0m 0 :0.0% 0 37|85 42|86 10m 9m 129 15:33:16

2789 10304 5979 *0 0 1|0 0 6.0g 12.5g 418.0m 0 :0.0% 0 42|82 44|83 11m 11m 129 15:33:17

3668 8509 4778 *0 0 1|0 0 6.0g 12.5g 412.0m 0 :0.0% 0 10|65 39|67 10m 9m 129 15:33:18

4151 8757 5172 *0 0 1|0 0 6.0g 12.5g 472.0m 0 :0.0% 0 29|94 32|95 11m 9m 129 15:33:19

3019 7988 6096 *0 0 1|0 0 6.0g 12.5g 477.0m 0 :0.0% 0 44|78 48|79 11m 9m 129 15:33:20

3661 7152 5647 *0 0 2|0 0 6.0g 12.5g 478.0m 0 :0.0% 0 53|75 56|75 11m 8m 129 15:33:21

2378 7132 6574 *0 0 1|0 0 6.0g 12.5g 479.0m 0 :0.0% 0 32|91 35|92 11m 8m 129 15:33:22

2437 8355 6379 *0 0 1|0 0 6.0g 12.5g 484.0m 0 :0.0% 0 41|83 43|84 11m 9m 129 15:33:23

2334 8889 5756 *0 0 1|0 0 6.0g 12.5g 483.0m 0 :0.0% 0 52|72 54|73 10m 9m 129 15:33:24

WiredTiger output looks like this:

insert query update delete getmore command flushes mapped vsize res faults locked db idx miss % qr|qw ar|aw netIn netOut conn time

2348 8786 5922 *0 0 2|0 n/a n/a n/a n/a n/a n/a n/a n/a n/a 10m 9m 129 15:35:48

9918 30670 21542 *0 0 1|0 n/a n/a n/a n/a n/a n/a n/a n/a n/a 39m 34m 129 15:35:49

11902 33152 20733 *0 0 1|0 n/a n/a n/a n/a n/a n/a n/a n/a n/a 41m 37m 129 15:35:50

3801 8540 5223 *0 0 1|0 n/a n/a n/a n/a n/a n/a n/a n/a n/a 11m 9m 129 15:35:51

*0 *0 *0 *0 0 1|0 n/a n/a n/a n/a n/a n/a n/a n/a n/a 79b 13k 129 15:35:52

2934 7013 5251 *0 0 2|0 n/a n/a n/a n/a n/a n/a n/a n/a n/a 10m 8m 129 15:35:53

548 1183 896 *0 0 1|0 n/a n/a n/a n/a n/a n/a n/a n/a n/a 1m 1m 129 15:35:54

52 91 77 *0 0 1|0 n/a n/a n/a n/a n/a n/a n/a n/a n/a 159k 118k 129 15:35:55

5622 14617 12105 *0 0 1|0 n/a n/a n/a n/a n/a n/a n/a n/a n/a 22m 16m 129 15:35:56
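To put a number on the inconsistency, here is a minimal sketch that compares the spread of the insert column between the two samples above. The values are copied from the mongostat output in this report; the helper names (`parse_count`, `spread`) and the choice of coefficient of variation as the metric are illustrative assumptions, not part of mongostat or of the original analysis.

```python
from statistics import mean, pstdev

# Insert column copied from the two mongostat samples in this report.
MMAPV1_INSERTS = ["3076", "2789", "3668", "4151", "3019",
                  "3661", "2378", "2437", "2334"]
WIREDTIGER_INSERTS = ["2348", "9918", "11902", "3801", "*0",
                      "2934", "548", "52", "5622"]


def parse_count(token):
    """Convert a mongostat counter to int (hypothetical helper).

    Some counters are printed with a leading '*' (for example "*0");
    the prefix is dropped before conversion.
    """
    return int(token.lstrip("*"))


def spread(tokens):
    """Summarise per-second rates: min, max, mean, coefficient of variation."""
    values = [parse_count(t) for t in tokens]
    avg = mean(values)
    return {
        "min": min(values),
        "max": max(values),
        "mean": avg,
        # Population standard deviation relative to the mean;
        # a higher value means less consistent throughput.
        "cv": pstdev(values) / avg if avg else 0.0,
    }


mmapv1_stats = spread(MMAPV1_INSERTS)
wiredtiger_stats = spread(WIREDTIGER_INSERTS)

for name, stats in (("MMAPv1", mmapv1_stats), ("WiredTiger", wiredtiger_stats)):
    print(f"{name}: min={stats['min']} max={stats['max']} "
          f"mean={stats['mean']:.0f} cv={stats['cv']:.2f}")
```

With only nine one-second samples per engine this is a rough indicator rather than a benchmark, but it makes the difference in the two traces easier to compare than eyeballing the raw rows.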

- is duplicated by
  - SERVER-16479 Pauses due to large page eviction during fetches under WiredTiger (Closed)
  - SERVER-16492 thread gets stuck doing update for a long time (Closed)
  - SERVER-16566 long pause during bulk insert due to time spend in __ckpt_server & __evict_server with wiredTiger (Closed)

- is related to
  - SERVER-16619 Very long pauses using 2.8.0-rc3 when running YCSB workload B. (Closed)

- related to
  - SERVER-16572 Add counter for calls to sched_yield in WiredTiger (Closed)
  - SERVER-16602 Review WiredTiger default settings for engine and collections (Closed)
  - SERVER-16643 extend existing THP warning to THP defrag setting (Closed)