- Type: Bug
- Resolution: Done
- Priority: Major - P3
- Affects Version/s: 2.8.0-rc5
- Component/s: Storage, WiredTiger


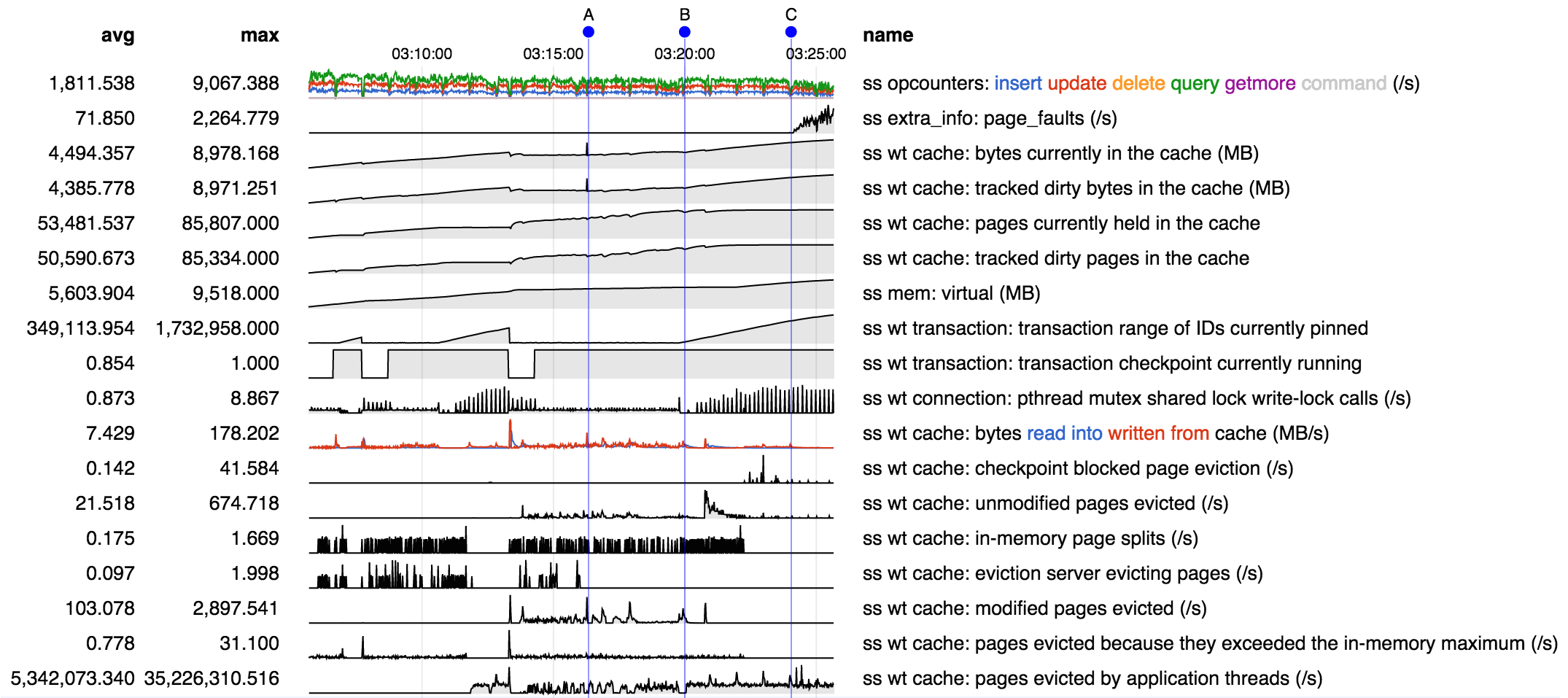

During a 20-minute run with a heavy mixed workload, the cache was observed to grow on occasion (2 runs out of about 30) to almost 9 GB, compared to a configured value of 5 GB. An assortment of potentially relevant metrics:

- at point B the checkpoint enters the second phase where "range of ids pinned" grows.

- at this point bytes in cache starts growing, and continues growing, apparently indefinitely.

- at C we begin to page, and performance declines correspondingly.

- if the run had continued beyond 20 minutes, the growth would likely have resulted in the process being killed by the OOM killer.

- checkpoint is running longer than it did in runs that didn't experience the issue - typically that third checkpoint completed before the end of the run.

- the spike in reported cached bytes at A, without corresponding increase in VM, is probably the apparent accounting error from SERVER-16881, which is seen to occur frequently in these runs. However, the increase starting at B that is the subject of this ticket is accompanied by an increase in VM, so is likely an unrelated issue.
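
The comparison of cache size against the configured limit described above can be sketched as a small check. This is only an illustration, not the tooling used for these runs: the helper name `cache_overshoot` is hypothetical, the sample numbers are rounded from the figures quoted in this ticket, and the two statistic names are taken from the title of SERVER-16997. In a live deployment the mapping would presumably come from the WiredTiger cache section of `serverStatus`; that wiring is an assumption and is not shown here.

```python
GIB = 1024 ** 3


def cache_overshoot(cache_stats):
    """Return (used_bytes, max_bytes, ratio) from a WiredTiger cache stats mapping.

    `cache_stats` is assumed to be a dict keyed by WiredTiger statistic names.
    """
    used = cache_stats["bytes currently in the cache"]
    limit = cache_stats["maximum bytes configured"]
    return used, limit, used / limit


# Illustrative values only: roughly 9 GB observed against a 5 GB configured cache.
sample = {
    "bytes currently in the cache": 9 * GIB,
    "maximum bytes configured": 5 * GIB,
}

used, limit, ratio = cache_overshoot(sample)
if ratio > 1.0:
    print(f"cache is at {ratio:.0%} of the configured maximum")
```

A ratio persistently above 1.0 that keeps climbing, together with rising VM, would match the behaviour starting at B rather than the accounting spike at A.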

- is related to: SERVER-16997 wiredtiger's "bytes currently in the cache" exceeds "maximum bytes configured" and eviction threads are deadlocked (Closed)

- related to: SERVER-16977 Memory increase trend when running hammar.mongo with WT (Closed)