- Type: Bug
- Resolution: Done
- Priority: Major - P3
- Affects Version/s: 3.1.3
- Component/s: WiredTiger
- Backwards Compatibility: Fully Compatible
- Operating System: ALL


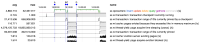

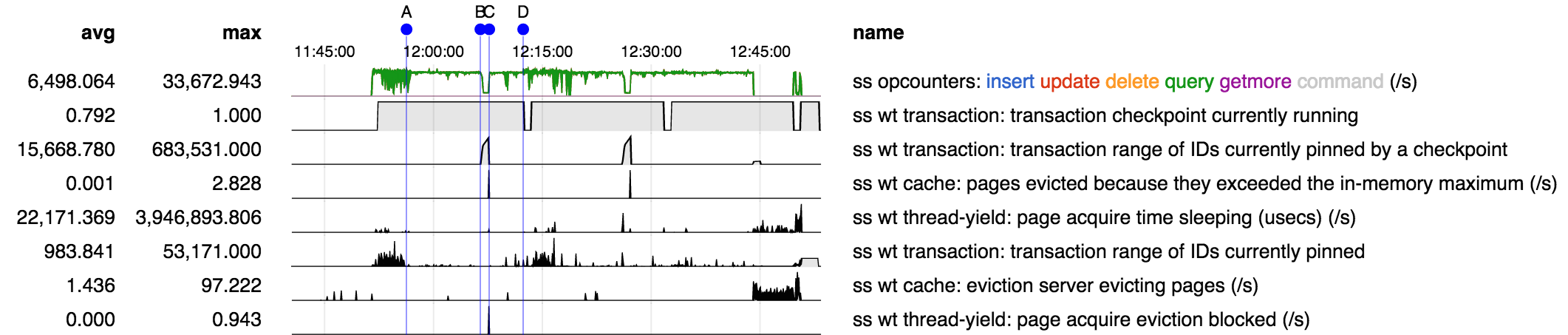

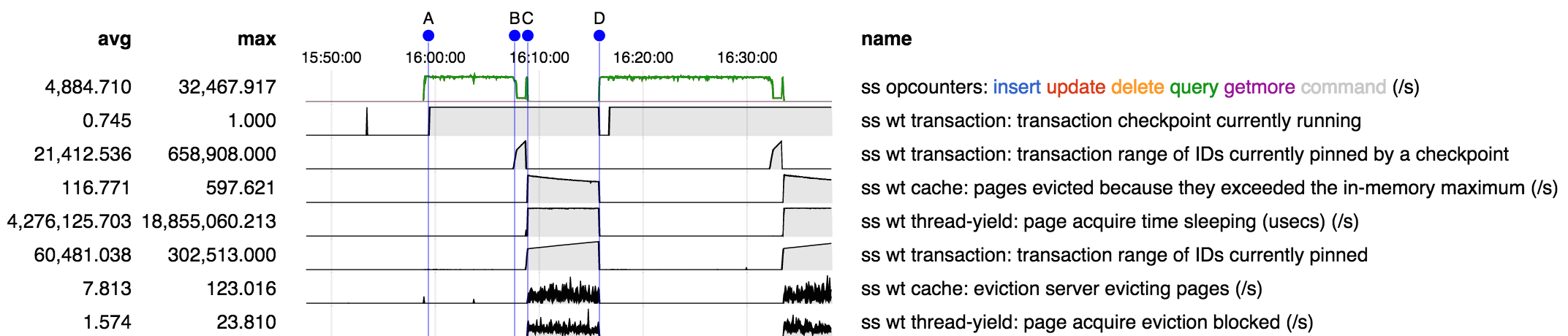

- 132 GB memory, 32 processors, slowish SSDs, 20 GB WT cache

- YCSB 10 fields/doc, 20M docs, 50/50 workload (zipfian distribution), 20 threads

- data set size is ~23 GB, but cache usage can grow to ~2x that and storage size to ~3x, believed to be due to the random update workload

- to avoid SERVER-18314, used either of the following (with the same results):
  - ext4 mounted with `-o commit=10000000`
  - xfs
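For anyone reproducing this, the YCSB settings listed above could be captured as a properties file. This is a minimal sketch, assuming YCSB's CoreWorkload property names and assuming "50/50" means a read/update mix (as in YCSB workload A). `workload_properties` is a hypothetical helper, and `operationcount` is not stated in the ticket, so it is left to the caller.

```python
# Sketch: the YCSB workload described in this ticket, rendered as a
# properties file. Assumes YCSB CoreWorkload property names; the
# operation count is NOT given in the ticket and must be supplied.


def workload_properties(operation_count: int) -> str:
    """Return YCSB properties for the 20M-doc, 50/50 zipfian workload."""
    props = {
        "recordcount": 20_000_000,   # 20M docs
        "fieldcount": 10,            # 10 fields/doc
        "readproportion": 0.5,       # assumed: 50/50 = reads/updates
        "updateproportion": 0.5,
        "insertproportion": 0,
        "scanproportion": 0,
        "requestdistribution": "zipfian",
        "threadcount": 20,           # 20 client threads
        "operationcount": operation_count,  # hypothetical: caller-chosen
    }
    # One key=value pair per line, in insertion order.
    return "".join(f"{key}={value}\n" for key, value in props.items())


if __name__ == "__main__":
    print(workload_properties(operation_count=100_000_000), end="")
```

The output could be saved to a file (name of your choosing) and passed to YCSB with `-P`, against a mongod started with `--wiredTigerCacheSizeGB 20` to match the 20 GB cache above.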

- 3.1.3-pre (f5450e9bc5cf63c3dc4d9cb416713b0f6970e6d4)
- 3.1.3 (51ad8eff0d50387f0565d23a15e7ba1db0ea962c)
- 3.1.4-pre (36ac7a5d8a6cc4f6280f90ce743ab05a77a541a8)

Notes:

- the issue for this ticket is the period from C to D; B to C appears to be a different issue

- appears to be a regression in 3.1.3

- same result with xfs and with ext4 mounted `-o commit=<large number>`

- superficially similar to SERVER-18314 in that it coincides with an fdatasync, but:
  - used settings that were shown to eliminate the SERVER-18314 issue
  - writes did not seem to be blocked as in SERVER-18314
  - only affected one of the two periods where fdatasync runs
  - so this appears to be a WT issue, not a platform issue

- attempted to get stack traces, but it appears the process is not interruptible during the fdatasync, and this issue coincides with that

- is related to:
  - SERVER-18314 Stall during fdatasync phase of checkpoints under WiredTiger and EXT4 (Closed)

- related to:
  - SERVER-18875 Oplog performance on WT degrades over time after accumulation of deleted items (Closed)
  - SERVER-18677 Throughput drop during transaction pinned phase of checkpoints under WiredTiger (larger data set) (Closed)
  - SERVER-18829 Cache usage exceeds configured maximum during index builds under WiredTiger (Closed)
  - WT-1907 Speed up transaction-refresh (Closed)