- Type: Bug
- Resolution: Done
- Priority: Critical - P2
- Affects Version/s: 3.0.3, 3.0.4
- Component/s: Performance, WiredTiger
- Backwards Compatibility: Fully Compatible
- Operating System: ALL
- Sprint: Quint Iteration 7, QuInt A (10/12/15), QuInt C (11/23/15), Repl 2016-08-29, Repl 2016-09-19, Repl 2016-10-10, Repl 2016-10-31

- hardware: 24 CPUs, 64 GB memory, SSD (all mongods and clients on the same machine)

- start a 3-member replica set with the following options:

mongod --oplogSize 50 --storageEngine wiredTiger --nojournal --replSet ...

Note: this also reproduces with the journal enabled; it was run without the journal only to rule that out as a cause.

- simple small-document insert workload: 5-16 threads (the exact number doesn't matter for the repro), each inserting small documents {_id:..., x:0} in batches of 10k
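A minimal Python sketch of the repro setup and workload above, for anyone re-running it. The client side assumes pymongo (not imported here: `collection` is any object with a pymongo-style `insert_many`). The set name `rs0`, ports 27017-27019, dbpath root `/tmp/repro`, thread and batch counts, and consecutive integer `_id`s are all hypothetical, since the report elides them.

```python
import os
import subprocess
import threading

# Hypothetical values: the report elides the set name, ports and dbpaths ("...").
REPL_SET = "rs0"
BASE_PORT = 27017
DBPATH_ROOT = "/tmp/repro"
BATCH_SIZE = 10_000  # from the report: inserts in batches of 10k


def mongod_argv(member):
    """Command line for one member, using the options listed in the report."""
    return [
        "mongod",
        "--port", str(BASE_PORT + member),
        "--dbpath", os.path.join(DBPATH_ROOT, f"m{member}"),
        "--oplogSize", "50",             # megabytes; deliberately small
        "--storageEngine", "wiredTiger",
        "--nojournal",                   # 3.0-era flag; also reproduces with the journal on
        "--replSet", REPL_SET,
    ]


def start_members(n=3):
    """Launch n mongod processes on this machine and return their Popen handles."""
    procs = []
    for member in range(n):
        # mongod does not create its dbpath, so make sure it exists first.
        os.makedirs(os.path.join(DBPATH_ROOT, f"m{member}"), exist_ok=True)
        procs.append(subprocess.Popen(mongod_argv(member)))
    return procs


def initiate_config(n=3):
    """Config document for the replSetInitiate command (rs.initiate() in the shell)."""
    return {
        "_id": REPL_SET,
        "members": [{"_id": i, "host": f"localhost:{BASE_PORT + i}"} for i in range(n)],
    }


def make_batch(start_id, size=BATCH_SIZE):
    """Return `size` small documents {_id, x: 0} with consecutive integer _ids."""
    return [{"_id": i, "x": 0} for i in range(start_id, start_id + size)]


def run_workload(collection, num_threads=8, batches_per_thread=100):
    """Insert batches from several threads; each thread owns a disjoint _id range."""
    def worker(thread_idx):
        base = thread_idx * batches_per_thread * BATCH_SIZE
        for b in range(batches_per_thread):
            collection.insert_many(make_batch(base + b * BATCH_SIZE), ordered=False)

    threads = [threading.Thread(target=worker, args=(t,)) for t in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
```

Once the three members are up, the set could be initiated from one of them, e.g. `MongoClient("localhost", 27017, directConnection=True).admin.command("replSetInitiate", initiate_config())`, and `run_workload` pointed at a collection on the primary. Giving each thread its own `_id` range keeps batches free of duplicate-key errors; `ordered=False` is a choice made in this sketch, not something the report specifies.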

Replication lag grows without bound because the secondaries apply ops at only about 50-80% of the primary's rate.
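Why a constant throughput deficit means unbounded lag, as a small Python model. It assumes a steady primary write rate and a secondary that applies ops at a fixed fraction `apply_ratio` of it; both are simplifications, not measurements from this report.

```python
def lag_seconds(elapsed_s, apply_ratio):
    """Seconds (of primary time) the secondary trails after `elapsed_s` seconds
    of steady load, if it applies ops at `apply_ratio` times the primary's rate."""
    if not 0 < apply_ratio <= 1:
        raise ValueError("apply_ratio must be in (0, 1]")
    # With primary rate P, after t seconds the secondary has applied r*P*t ops,
    # i.e. the primary's writes up to time r*t, so it is (1 - r) * t behind.
    return (1.0 - apply_ratio) * elapsed_s
```

Under this model the lag grows linearly for any ratio below 1: at the reported 50-80%, the secondary falls a further 12-30 minutes behind for every hour of sustained load, and only `apply_ratio >= 1` keeps it bounded.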

Some stats of note:

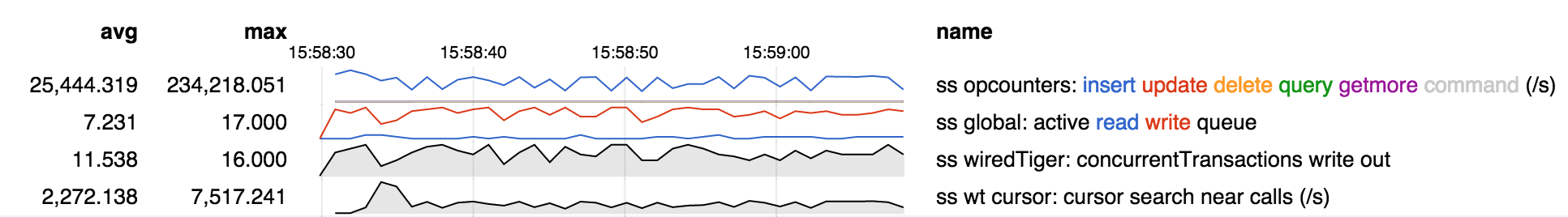

primary

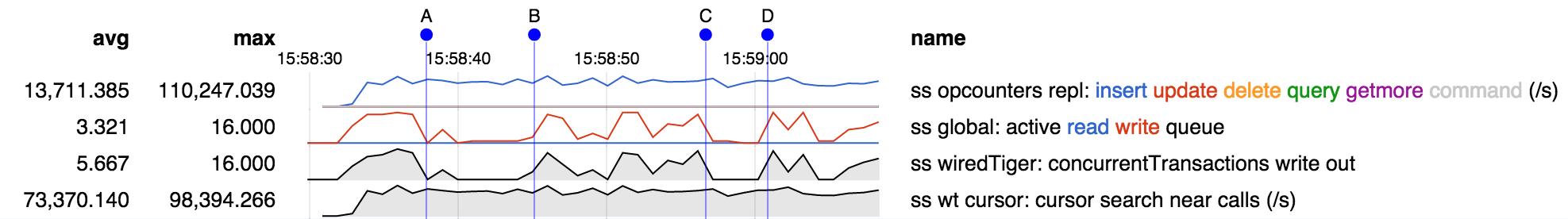

secondary

- the op rate on the secondary is roughly half that on the primary

- ops in flight (i.e. active queues) are much less even on the secondary (they fluctuate far more), although that isn't reflected in the reported op rates

- the secondary executes far more WiredTiger cursor search near calls: about one per document, versus roughly one per 100 documents on the primary
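A hedged sketch of how the per-document search near figures could be measured: snapshot WiredTiger's cursor statistics on a member before and after a run, then divide the delta by the number of documents inserted. The stat key `"cursor search near calls"` under `serverStatus` `wiredTiger.cursor` is an assumption about how this build exposes WiredTiger's counter, `client` is assumed to be a pymongo `MongoClient` connected directly to the member being measured, and `docs_inserted` must come from the caller (e.g. the workload's own count).

```python
# Assumed stat name under serverStatus()["wiredTiger"]["cursor"].
SEARCH_NEAR = "cursor search near calls"


def cursor_stats(client):
    """Snapshot of WiredTiger cursor statistics via a pymongo MongoClient."""
    return client.admin.command("serverStatus")["wiredTiger"]["cursor"]


def search_near_per_doc(before, after, docs_inserted):
    """Cursor search near calls per inserted document between two snapshots."""
    if docs_inserted <= 0:
        raise ValueError("docs_inserted must be positive")
    return (after[SEARCH_NEAR] - before[SEARCH_NEAR]) / docs_inserted
```

Per the observations above, this ratio would come out near 1.0 on the secondary and near 0.01 on the primary.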

Will get stack traces.

- depends on
  - SERVER-19122 Out-of-order _id index inserts on secondary contribute to replica lag under WiredTiger (Closed)
  - WT-1989 Improvements to log slot freeing to improve thread scalability (Closed)
  - SERVER-18983 Process oplog inserts, and applying, on the secondary in parallel (Closed)
  - SERVER-15344 Make replWriter thread pool size tunable (Closed)
  - SERVER-18982 Apply replicated inserts as inserts (Closed)
- is duplicated by
  - SERVER-21444 WiredTiger replica set member is getting behind while syncing the oplog during the initialSync (Closed)
- related to
  - SERVER-19668 Waiting time for write concern increase as time goes by (Closed)
  - SERVER-21858 A high throughput update workload in a replica set can cause starvation of secondary reads (Closed)