Type: Bug

Resolution: Done

Priority: Major - P3

Affects Version/s: 3.0.5

Component/s: Concurrency, WiredTiger

None

Fully Compatible

ALL

QuInt 8 08/28/15

- single collection, 100k documents (fits in memory)

- 12 CPUs (6 cores)

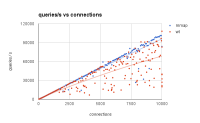

- workload: n connections, each querying a single random document by _id in a loop

- first measured the raw maximum capacity using 150 connections, each issuing queries as fast as possible; the numbers are similar for WT (174k queries/s) and mmapv1 (204k queries/s)

- then simulated a customer app by adding a delay in the loop so that each connection executes 10 queries/s, and ramped the number of connections up to 10k. The expected throughput is 10 queries/connection/s * 10k connections = 100k queries/s. This is well below (roughly half) the measured maximum raw capacity of both WT and mmapv1, so we expect to achieve close to 100k queries/s at 10k connections

- mmapv1 does come close to that (75k queries/s), but WT only gets about 25k queries/s at 10k connections, and its behavior is erratic
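The expected-throughput arithmetic above can be written out as a short sketch. `expectedThroughput` and `loadFraction` are hypothetical helpers introduced only for illustration; the capacities plugged in are the flat-out numbers reported in this ticket.

```javascript
// Offered load when every connection is paced to a fixed per-connection rate.
function expectedThroughput(queriesPerConnPerSec, connections) {
  return queriesPerConnPerSec * connections;
}

// Fraction of the measured flat-out capacity that the paced workload asks for.
function loadFraction(offered, maxCapacity) {
  return offered / maxCapacity;
}

const offered = expectedThroughput(10, 10000); // 100k queries/s
const measured = { wt: 174000, mmapv1: 204000 }; // flat-out capacity with 150 connections

for (const [engine, capacity] of Object.entries(measured)) {
  const pct = (100 * loadFraction(offered, capacity)).toFixed(0);
  console.log(`${engine}: offered load is ${pct}% of max capacity`);
}
```

This prints 57% for WT and 49% for mmapv1, which is where "roughly half" comes from.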

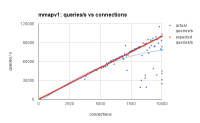

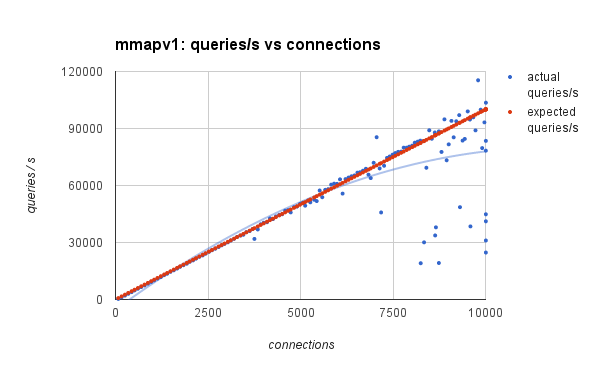

mmapv1

- max raw capacity is 204k queries/s (as described above, this is with 150 connections each issuing queries as fast as possible)

- as connections are ramped up to 10k, this time with each connection issuing only 10 queries/s, throughput is excellent up to about 6k connections, with some mild falloff above that

- at 10k connections getting about 75k queries/s (estimated by fitting the blue quadratic trendline), not too far below the expected 100k queries/s
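The 10k-connection figures are read off a quadratic trendline fitted to the (connections, throughput) samples. The ticket does not say which tool produced the trendline, so the following is only a minimal sketch, assuming an ordinary least-squares fit of y = c0 + c1*x + c2*x^2 with x expressed in thousands of connections (which keeps the normal equations well conditioned). `fitQuadratic` and `evalQuadratic` are hypothetical helpers, and no measured samples are included here.

```javascript
// Least-squares fit of y = c0 + c1*x + c2*x^2 by solving the 3x3 normal equations.
function fitQuadratic(xs, ys) {
  // S[k] = sum of x^k (k = 0..4), T[k] = sum of x^k * y (k = 0..2).
  const S = [0, 0, 0, 0, 0];
  const T = [0, 0, 0];
  for (let i = 0; i < xs.length; i++) {
    let p = 1;
    for (let k = 0; k <= 4; k++) {
      S[k] += p;
      if (k <= 2) T[k] += p * ys[i];
      p *= xs[i];
    }
  }
  // Augmented matrix [A | T] with A[r][c] = S[r + c].
  const M = [0, 1, 2].map((r) => [S[r], S[r + 1], S[r + 2], T[r]]);
  // Gaussian elimination with partial pivoting.
  for (let col = 0; col < 3; col++) {
    let piv = col;
    for (let r = col + 1; r < 3; r++) {
      if (Math.abs(M[r][col]) > Math.abs(M[piv][col])) piv = r;
    }
    [M[col], M[piv]] = [M[piv], M[col]];
    for (let r = col + 1; r < 3; r++) {
      const f = M[r][col] / M[col][col];
      for (let c = col; c < 4; c++) M[r][c] -= f * M[col][c];
    }
  }
  // Back substitution gives [c0, c1, c2].
  const coef = [0, 0, 0];
  for (let r = 2; r >= 0; r--) {
    let s = M[r][3];
    for (let c = r + 1; c < 3; c++) s -= M[r][c] * coef[c];
    coef[r] = s / M[r][r];
  }
  return coef;
}

// Evaluate the fitted trendline at x (thousands of connections).
function evalQuadratic([c0, c1, c2], x) {
  return c0 + c1 * x + c2 * x * x;
}
```

Given the measured samples, `evalQuadratic(fitQuadratic(xs, ys), 10)` would give the trendline's estimate at 10k connections.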

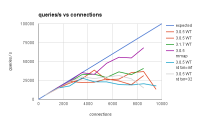

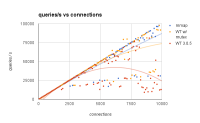

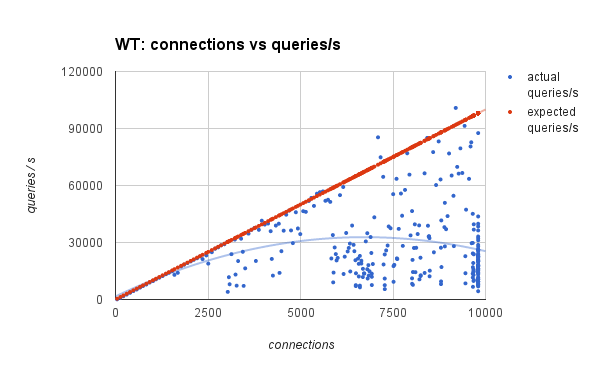

WiredTiger

- max raw capacity is similar to mmapv1 at 174k queries/s (as described above, this is with 150 connections each issuing queries as fast as possible)

- but as connections are ramped up to 10k, this time with each connection issuing only 10 queries/s, behavior becomes erratic above about 3k connections

- at 10k connections getting only about 25k queries/s (estimated by fitting the blue quadratic trendline), far below the expected 100k queries/s
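One way to read the shortfall is to convert it into an implied per-query latency. This is an inference, not something measured in the ticket: it assumes a closed loop (each connection sends a query, waits for the reply, then sleeps the ~100 ms delay) and that the benchmark client itself is not the bottleneck. `impliedLatencyMs` is a hypothetical helper, and the 75k and 25k inputs are the trendline estimates quoted above.

```javascript
// Closed-loop model: per-connection rate = 1000 / (delayMs + latencyMs) queries/s.
// Solving for latencyMs gives the average round trip implied by an observed
// aggregate throughput.
function impliedLatencyMs(aggregateQps, connections, delayMs) {
  const perConnQps = aggregateQps / connections;
  return 1000 / perConnQps - delayMs;
}

const delayMs = 100; // pacing delay in the repro (about 10 queries/s per connection)
for (const [engine, qps] of [["mmapv1", 75000], ["WT", 25000]]) {
  const ms = impliedLatencyMs(qps, 10000, delayMs).toFixed(0);
  console.log(`${engine}: ~${ms} ms average query latency implied at 10k connections`);
}
```

Under these assumptions, mmapv1's 75k queries/s corresponds to about 33 ms per query and WT's 25k queries/s to about 300 ms.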

Repro code:

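```javascript
// Populate test.c with 100k documents, inserted in unordered bulk batches of 10k.
function repro_setup() {
    var count = 100000;
    var every = 10000;
    for (var i = 0; i < count; ) {
        var bulk = db.c.initializeUnorderedBulkOp();
        for (var j = 0; j < every; j++, i++)
            bulk.insert({_id: i});  // integer _id so the #RAND_INT query below matches a document
        bulk.execute();
        print(i);  // progress
    }
}

// Current number of client connections reported by the server.
function conns() {
    return db.serverStatus().connections.current;
}

// Start benchRun clients in batches of 10 until threads_query extra connections are open.
// Each client queries a random _id between 0 and 10000, then waits about 100 ms
// (95-105 ms), i.e. about 10 queries/s per connection, for `seconds` seconds.
function repro(threads_query, seconds) {
    var start_conns = conns();
    while (conns() < start_conns + threads_query) {
        var ops_query = [{
            op: "query",
            ns: "test.c",
            query: {_id: {"#RAND_INT": [0, 10000]}},
            delay: NumberInt(100 + Math.random() * 10 - 5)
        }];
        benchStart({
            ops: ops_query,
            seconds: seconds,
            parallel: 10
        });
        sleep(100);
    }
}
```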
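The ramp in `repro()` is bounded by its own pacing: each `benchStart()` call adds `parallel: 10` clients and the loop then sleeps 100 ms. A rough sketch of that timing follows; `rampPlan` is a hypothetical helper, and the result is a lower bound because it ignores the time spent in `serverStatus()` and `benchStart()` themselves.

```javascript
// Lower bound on ramp-up time for repro(): batches of `parallel` clients,
// one batch every sleepMs milliseconds.
function rampPlan(targetConns, parallel, sleepMs) {
  const batches = Math.ceil(targetConns / parallel);
  return {
    batches,
    minRampSeconds: (batches * sleepMs) / 1000,
  };
}

console.log(rampPlan(10000, 10, 100)); // { batches: 1000, minRampSeconds: 100 }
```

Reaching 10k connections therefore takes at least about 100 s. Since each `benchStart()` client runs for only `seconds`, choosing `seconds` shorter than the ramp would likely let the earliest clients finish before the last ones start.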

- is related to: SERVER-20167 Avoid spinlock in TimerStats (Closed)