- Type: Bug
- Resolution: Done
- Priority: Major - P3
- Affects Version/s: None
- Component/s: WiredTiger
- Fully Compatible
- ALL
- Storage 2017-11-13

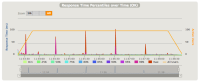

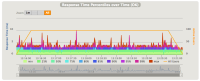

We store a very large number of collections (thousands) in our databases. We plan to migrate our MongoDB storage from MMAPv1 to WiredTiger, but before doing so we ran a series of performance tests on MongoDB 3.2.7 and 3.2.8. We created a test dataset with a large number of collections (30,000) and wrote a test that performs only read-by-id operations. The results showed latency peaks (please see attached screenshot 1). The test was executed on the following hardware configurations:

- a replica set with 3 nodes deployed on Amazon EC2 with SSD drives, using the XFS file system

- a single MongoDB instance running on a MacBook Pro

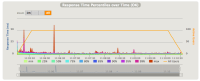

We observed similar performance characteristics for both configurations. After reading the docs and tuning the WiredTiger configuration, we concluded that the peaks are probably caused by the periodic flushing of memory to disk (fsync). We tried setting the syncdelay option to zero (which is actually not recommended) and noticed that performance improved, but the peaks were still there (please see attached screenshot 2).
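One way to check whether the peaks line up with the periodic flush is to measure the spacing between them. The snippet below is a minimal sketch, not part of the attached test suite: it assumes latency samples are available as `(timestamp_seconds, latency_ms)` pairs, and the function names and the `factor` and `min_gap` thresholds are hypothetical. If the peaks are tied to checkpoints, the gaps should cluster near the configured syncdelay (60 seconds by default).

```python
from statistics import median


def find_peaks(samples, factor=5.0):
    """Return timestamps of samples whose latency exceeds factor * median latency.

    samples: list of (timestamp_seconds, latency_ms) tuples, sorted by time.
    The factor of 5 is an arbitrary illustrative threshold.
    """
    if not samples:
        return []
    baseline = median(lat for _, lat in samples)
    return [ts for ts, lat in samples if lat > factor * baseline]


def peak_intervals(peak_times, min_gap=5.0):
    """Collapse peaks less than min_gap seconds apart into one event,
    then return the gaps (in seconds) between consecutive events."""
    events = []
    last = None
    for ts in peak_times:
        if last is None or ts - last >= min_gap:
            events.append(ts)
        last = ts
    return [b - a for a, b in zip(events, events[1:])]
```

Feeding it the per-request timings exported from the load test gives a list of gaps; a roughly constant gap suggests a periodic cause, while irregular gaps point elsewhere.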

In order to reproduce the problem please use the attached zip file containing the following:

- Mongo shell script to easily load the test data

- minimal REST service that makes calls to Mongo

- Gatling simulation, which makes calls to the REST service

Steps to reproduce:

1. Load test data with MongoDB shell script

2. Run REST service

3. Run Gatling simulation

4. Notice the recurring peaks on the latency charts

For more detailed instructions on how to run it, please see README.txt in the zip.
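The attached loader script and REST service are not reproduced in this ticket text. As a rough illustration of the workload only, the following sketch creates many small collections and then times random read-by-id operations using pymongo directly. The collection naming scheme, document shape, documents-per-collection count, and database name are all assumptions; only the figure of 30,000 collections comes from the description above.

```python
import random
import time

N_COLLECTIONS = 30_000     # collection count reported in this ticket
DOCS_PER_COLLECTION = 10   # assumption: not stated in the ticket


def collection_name(i):
    """Hypothetical naming scheme for the test collections."""
    return f"coll_{i:05d}"


def load_test_data(db, n_collections=N_COLLECTIONS,
                   docs_per_collection=DOCS_PER_COLLECTION):
    """Create n_collections collections, each holding a few small documents."""
    for i in range(n_collections):
        docs = [{"_id": j, "payload": "x" * 64}
                for j in range(docs_per_collection)]
        db[collection_name(i)].insert_many(docs)


def timed_read_by_id(db, rng, n_collections=N_COLLECTIONS,
                     docs_per_collection=DOCS_PER_COLLECTION):
    """Read one random document by _id; return (document, latency in ms)."""
    name = collection_name(rng.randrange(n_collections))
    doc_id = rng.randrange(docs_per_collection)
    start = time.perf_counter()
    doc = db[name].find_one({"_id": doc_id})
    return doc, (time.perf_counter() - start) * 1000.0


def main(n_reads=100_000):
    # Imported lazily so the helpers above work without pymongo installed.
    from pymongo import MongoClient

    # Hypothetical connection string and database name.
    db = MongoClient("mongodb://localhost:27017")["wt_perf_test"]
    load_test_data(db)
    rng = random.Random(0)
    for _ in range(n_reads):
        _, latency_ms = timed_read_by_id(db, rng)
        print(f"{time.time():.3f} {latency_ms:.2f}")
```

Calling `main()` against a local mongod prints one timestamp and latency per read, which can be plotted to look for the recurring peaks. This bypasses the REST service and Gatling, so absolute numbers will differ from the attached charts.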

We also ran the above tests on MongoDB 3.2.1. We conducted multiple tests, both locally and on our machines on AWS, and the performance was fine, with no peaks. The results can be seen in screenshot 3.

- is related to: WT-2831 Skip creating a checkpoint if there have been no changes (Closed)