- Type: Bug
- Resolution: Duplicate
- Priority: Major - P3
- Affects Version/s: 3.6.0-rc8
- Component/s: WiredTiger
- ALL
- Storage Non-NYC 2018-03-12
- 0

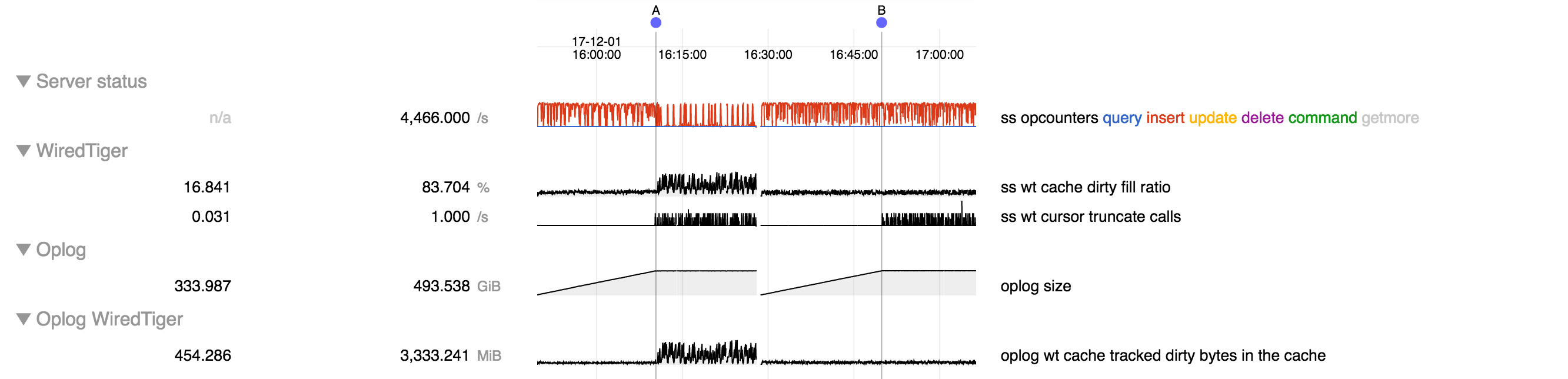

Setup: 1-node replica set, HDD, 500 GB oplog, 4 GB cache, 5 threads inserting 130 kB docs. Left is 3.6.0-rc8, right is 3.4.10.

At point A, where the oplog fills and oplog truncation begins in 3.6.0-rc8, we see large amounts of oplog being read into cache, large amounts of dirty data in cache, and resulting operation stalls. This does not occur at the corresponding point B when running on 3.4.10.

FTDC data for the above two runs attached.
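
For anyone trying to watch for the same symptoms on a live node, the cache pressure described above can be roughly summarized from the `wiredTiger.cache` section of `serverStatus`. The following is a minimal sketch, not the method used to produce the attached data: the helper name `cache_summary` and the sample numbers are hypothetical, and it assumes the standard WiredTiger cache statistic names.

```python
def cache_summary(server_status):
    """Summarize WiredTiger cache pressure from a serverStatus-style dict.

    Hypothetical helper: it only reads statistics from the
    wiredTiger.cache section and divides by the configured cache size.
    """
    cache = server_status["wiredTiger"]["cache"]
    max_bytes = cache["maximum bytes configured"]
    return {
        # Fraction of the configured cache currently in use.
        "fill_ratio": cache["bytes currently in the cache"] / max_bytes,
        # Fraction of the configured cache holding dirty data.
        "dirty_ratio": cache["tracked dirty bytes in the cache"] / max_bytes,
        # Cumulative counter; sample it twice to get a read rate.
        "bytes_read_into_cache": cache["bytes read into cache"],
    }


# Made-up sample values for illustration only (4 GB cache, as in this ticket).
GIB = 1024 ** 3
sample_status = {
    "wiredTiger": {
        "cache": {
            "maximum bytes configured": 4 * GIB,
            "bytes currently in the cache": 3 * GIB,
            "tracked dirty bytes in the cache": 1 * GIB,
            "bytes read into cache": 123,
        }
    }
}

summary = cache_summary(sample_status)
```

With pymongo, a real document of this shape can be obtained from `client.admin.command("serverStatus")`. Polling it around the time the oplog fills would be one way to compare dirty-cache growth between the two versions.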

- depends on
  - WT-3805 Avoid reading lookaside pages in truncate fast path (Closed)
- is duplicated by
  - SERVER-32547 Mongo stalls while evicting dirty cache (Closed)
- related to
  - SERVER-32154 Unbounded lookaside table growth during insert workload (Closed)