Type: Question

Resolution: Works as Designed

Priority: Major - P3

Affects Version/s: 3.4.10, 3.4.14

Component/s: Performance, WiredTiger

Hi,

On a single server we host four mongod instances belonging to two different sharded clusters:

- One primary from cluster1

- One arbiter from cluster1

- One secondary from cluster2

- One arbiter from cluster2

The server has the following specs:

- 8 cores

- 64GB RAM

- 4GB swap

We have 20 servers with almost the same deployment:

- One primary from one cluster

- One secondary from the other cluster

- One arbiter from each cluster

We are currently facing issues with memory and swap consumption.

Usually the primary consumes most of the memory and most of the swap.

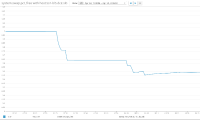

We recently restarted the secondary on one server, and it appears the primary uses almost 40GB of RAM:

                 total       used       free     shared    buffers     cached
    Mem:         64347      40719      23627        452          5       2360
    -/+ buffers/cache:      38353      25993
    Swap:         4095       1419       2676
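The `free` output above uses the older procps layout, with values in MiB. As a small illustrative sketch (not part of the original report), the Python below parses the `Mem:` and `Swap:` rows and recomputes the memory held by processes once reclaimable buffers and page cache are excluded, which is what the `-/+ buffers/cache` row reports. `parse_free` is a hypothetical helper written only for this example.

```python
# Raw `free -m` output from the report (older procps layout, values in MiB).
FREE_OUTPUT = """\
             total       used       free     shared    buffers     cached
Mem:         64347      40719      23627        452          5       2360
-/+ buffers/cache:      38353      25993
Swap:         4095       1419       2676
"""


def parse_free(text: str) -> dict:
    """Parse the Mem: and Swap: rows of old-style `free -m` output into dicts."""
    lines = text.splitlines()
    header = lines[0].split()  # total, used, free, shared, buffers, cached
    rows = {}
    for line in lines[1:]:
        label, _, rest = line.partition(":")
        if label in ("Mem", "Swap"):  # skip the derived -/+ buffers/cache row
            values = [int(v) for v in rest.split()]
            rows[label] = dict(zip(header, values))  # Swap only fills 3 columns
    return rows


rows = parse_free(FREE_OUTPUT)
mem = rows["Mem"]
# Memory used by processes, excluding reclaimable buffers and page cache.
app_used = mem["used"] - mem["buffers"] - mem["cached"]
print(f"used by processes: {app_used} MiB")
print(f"swap used: {rows['Swap']['used']} of {rows['Swap']['total']} MiB")
```

The recomputed value (38354 MiB) is within 1 MiB of the 38353 shown by `free`, presumably because each column is rounded independently.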

A few weeks ago we capped the WiredTiger cache size (wiredTigerCacheSizeGB) at 12GB per mongod, but the issue persists.

We tried lowering it to 10GB, but we started to see slowness and performance issues, so we went back up to 12GB.
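For context, the MongoDB 3.4 documentation gives the default WiredTiger cache size as the larger of 50% of (RAM - 1 GB) or 256 MB. The sketch below, assuming the 64GB of RAM and the two data-bearing mongod processes per server described above, compares that default with the 12GB cap. This arithmetic covers only the WiredTiger cache; it says nothing about memory a mongod uses outside that cache. `default_wt_cache_gb` is a hypothetical helper for illustration.

```python
def default_wt_cache_gb(ram_gb: float) -> float:
    """Default WiredTiger cache size per the MongoDB 3.4 docs:
    the larger of 50% of (RAM - 1 GB) or 256 MB (0.25 GB)."""
    return max(0.5 * (ram_gb - 1), 0.25)


RAM_GB = 64               # server RAM from the report
CONFIGURED_CACHE_GB = 12  # cap set on each data-bearing mongod
DATA_BEARING = 2          # one primary + one secondary per server; arbiters excluded

default_each = default_wt_cache_gb(RAM_GB)
print(f"default cache per mongod:    {default_each} GB")
print(f"default cache, both mongods: {DATA_BEARING * default_each} GB")
print(f"configured cache, both:      {DATA_BEARING * CONFIGURED_CACHE_GB} GB")
```

Without the cap, the two defaults alone (31.5 GB each) would nearly equal the server's 64GB of RAM; with it, the configured caches total 24GB.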

    USER       PID %CPU %MEM      VSZ      RSS TTY STAT START     TIME COMMAND
    mongodb   5088  0.2  0.0  1021216    33016 ?   Ssl  Mar29    46:20 /opt/mongo/clust-users-1-shard10-arb-2 --config /etc/mongodb.d/clust-users-1-shard10-arb-2.conf
    mongodb  24194  0.3  0.0  1025444    34460 ?   Ssl  Mar27    57:03 /opt/mongo/clust-users-2-shard9-arb-1 --config /etc/mongodb.d/clust-users-2-shard9-arb-1.conf
    mongodb  12199 85.4 33.0 23322576 21776176 ?   Ssl  15:08    55:47 /opt/mongo/clust-users-2-shard6-1 --config /etc/mongodb.d/clust-users-2-shard6-1.conf
    mongodb   9296  156 55.5 43653800 36571228 ?   Ssl  Mar20 46232:29 /opt/mongo/clust-users-1-shard8-1 --config /etc/mongodb.d/clust-users-1-shard8-1.conf

clust-users-1-shard8-1 (the primary) uses 55.5% of the memory (about 35GB RSS).

clust-users-2-shard6-1 (the secondary) already uses 33% of the memory (about 21GB RSS), even though it was restarted less than a day ago and has not been primary at any point.
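As a quick sanity check of the `ps` figures: `ps` reports RSS in KiB, so the sketch below converts each process's RSS to GiB, expresses it as a share of the 64347 MiB total reported by `free`, and subtracts the 12GB cache cap. Treating the configured 12GB as GiB is an assumption, and `rss_gib` and `pct_of_ram` are hypothetical helpers written for this example.

```python
# RSS values (KiB) copied from the `ps aux` rows above.
PS_RSS_KIB = {
    "clust-users-1-shard10-arb-2": 33016,
    "clust-users-2-shard9-arb-1": 34460,
    "clust-users-2-shard6-1": 21776176,   # secondary
    "clust-users-1-shard8-1": 36571228,   # primary
}
MEM_TOTAL_MIB = 64347   # "total" column of the Mem: row from `free -m`
CACHE_CAP_GIB = 12      # assumption: the configured 12GB is interpreted as GiB


def rss_gib(rss_kib: int) -> float:
    """Convert an RSS value in KiB to GiB."""
    return rss_kib / 1024 ** 2


def pct_of_ram(rss_kib: int, mem_total_mib: int = MEM_TOTAL_MIB) -> float:
    """RSS as a percentage of total RAM, comparable to the ps %MEM column."""
    return 100 * rss_kib / (mem_total_mib * 1024)


for name, rss_kib in PS_RSS_KIB.items():
    gib = rss_gib(rss_kib)
    # Arbiters sit far below the cap, so clamp the difference at zero.
    above_cap = max(gib - CACHE_CAP_GIB, 0.0)
    print(f"{name:28} {gib:6.2f} GiB  {pct_of_ram(rss_kib):5.1f}% of RAM  "
          f"{above_cap:6.2f} GiB above cache cap")
```

For the primary this gives roughly 34.9 GiB of RSS, about 22.9 GiB more than the cache cap, and the computed percentages match the 55.5% and 33.0% reported by `ps`.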

We do not understand how or why a single instance can use more than half of the server's total memory when wiredTigerCacheSizeGB is set.

Attached are the configuration files for each instance, including the arbiters.

Thanks,

Benoit

Depends on: WT-4053 Add statistic for data handle size (Closed)