- Type: Bug
- Resolution: Incomplete
- Priority: Major - P3
- Affects Version/s: 3.4.4
- Component/s: WiredTiger

Over the last couple of months, we have seen the hot secondary in one of our replica sets spike in memory usage and then get killed by the Linux OOM killer.

*Setup:*

- Mongod version: 3.4.4
- Replica set config:
  - 3 boxes:
    - One primary and one hot secondary, each with 8 cores and ~64 GB of memory, with swap disabled
      - Each box runs in Amazon EC2
      - Each box has a 1500 GB file system mounted for its data directory
      - Each box has a 50 GB file system mounted for the db logs
    - One backup secondary with 4 cores and 32 GB of memory
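For context on the memory budget: assuming `storage.wiredTiger.engineConfig.cacheSizeGB` is left unset (the report does not say either way), MongoDB 3.4 sizes the WiredTiger cache at the larger of 50% of (RAM - 1 GB) or 256 MB. This minimal sketch applies that rule to the nominal memory sizes listed above; the helper name is illustrative only.

```python
def default_wt_cache_gb(ram_gb: float) -> float:
    """Hypothetical helper: default WiredTiger cache size in GB for MongoDB 3.4+
    when cacheSizeGB is not configured (max of 50% of (RAM - 1 GB) and 256 MB)."""
    return max(0.5 * (ram_gb - 1.0), 0.25)  # 256 MB expressed as 0.25 GB


# Nominal RAM sizes taken from the setup list above.
for label, ram_gb in [("primary / hot secondary", 64), ("backup secondary", 32)]:
    cache_gb = default_wt_cache_gb(ram_gb)
    print(f"{label}: {ram_gb} GB RAM -> {cache_gb} GB cache, "
          f"{ram_gb - cache_gb} GB outside the cache")
```

Under that assumption, a ~64 GB box would cap the cache at about 31.5 GB, leaving roughly 32 GB for the OS and for everything mongod allocates outside the cache.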

The load on the hot secondary consists of keeping in sync with the primary plus some queries on two collections in this replica set. These queries sometimes include full collection scans.

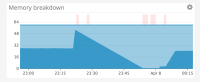

Every once in a while, the hot secondary's memory usage spikes and the OOM killer kills the running mongod process (see the attached mongod memory spike screenshot).

There are some other strange things about mongod running out of memory. One is that WiredTiger cache usage does not seem to increase during this period (see the attached cache usage image).
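One way to check this against the attached data: the diagnostic.data (FTDC) files record periodic `serverStatus` samples, and the same document can be fetched live with PyMongo's `client.admin.command("serverStatus")`. The sketch below is a rough approximation, not an exact accounting: it splits one sample into WiredTiger cache usage and memory allocated outside the cache, using the `wiredTiger.cache`, `tcmalloc.generic`, and `mem.resident` sections. It assumes the `tcmalloc` section is present (true for builds using tcmalloc, the Linux default), and the function name is hypothetical.

```python
MIB = 1024 * 1024


def memory_breakdown(status: dict) -> dict:
    """Hypothetical helper: approximate split of mongod memory from one
    serverStatus document into WiredTiger cache vs. other allocations."""
    cache = status["wiredTiger"]["cache"]
    in_cache = cache["bytes currently in the cache"]
    cache_max = cache["maximum bytes configured"]
    # Assumes a tcmalloc build; the section is absent with other allocators.
    generic = status["tcmalloc"]["generic"]
    allocated = generic["current_allocated_bytes"]
    heap = generic["heap_size"]
    return {
        "cache_fill": in_cache / cache_max,           # fraction of configured cache in use
        "allocated_outside_cache": allocated - in_cache,  # approximate non-cache allocations
        "heap_not_allocated": heap - allocated,        # free/fragmented memory held by tcmalloc
        "resident_bytes": status["mem"]["resident"] * MIB,  # mem.resident is reported in MiB
    }
```

Comparing samples from before and during a spike would show whether the growth falls outside the cache, which would be consistent with the cache staying flat.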

There also seems to be a huge spike in the amount of data read from disk, about 40 GB, right before mongod's memory usage spikes (see the attached disk read spike). Oddly, those reads are on the file system that holds the mongod logs (mounted at `/`), not the one that holds the mongod data.
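To confirm which block device served those reads, one option is to snapshot Linux's `/proc/diskstats` before and after a spike; its sixth field is sectors read, always counted in 512-byte units. This minimal sketch computes bytes read per device between two snapshots. The helper names are hypothetical, and device names depend on the instance, so they would need to be mapped to mount points (for example via `/proc/mounts` or `df`).

```python
SECTOR_BYTES = 512  # /proc/diskstats counts sectors in 512-byte units


def bytes_read_by_device(diskstats_text: str) -> dict:
    """Hypothetical helper: cumulative bytes read per device from one snapshot."""
    result = {}
    for line in diskstats_text.splitlines():
        fields = line.split()
        if len(fields) < 6:
            continue  # skip blank or malformed lines
        # Fields: major, minor, device name, reads completed, reads merged, sectors read, ...
        result[fields[2]] = int(fields[5]) * SECTOR_BYTES
    return result


def read_delta(before: str, after: str) -> dict:
    """Bytes read per device between two /proc/diskstats snapshots."""
    b, a = bytes_read_by_device(before), bytes_read_by_device(after)
    return {dev: a[dev] - b.get(dev, 0) for dev in a}
```

Usage would be to read `open("/proc/diskstats").read()` once before and once after the suspected window and pass both strings to `read_delta`.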

I have noticed https://jira.mongodb.org/browse/SERVER-27909, which looks like it could be related.

I have also attached the diagnostic.data files from during and after the incident. I believe the incident should be near the end of `metrics.2018-04-08T00-12-09Z-00000`. Let me know if you need any other data. Any help would be greatly appreciated.