-

Type:

Bug

-

Resolution: Community Answered

-

Priority:

Major - P3

-

None

-

Affects Version/s: 3.6.16

-

Component/s: Sharding

-

None

-

ALL

-

Description

Periodically our MongoS (version 3.6.16) task executors experience intermittent increased latency while establishing new connections to MongoD. The latency occurs between the ASIOConnection::setup's call to strand()->dispatch() and the dispatch lambda being invoked. This can delay the call to NetworkInterfaceASIO::_connect for up to 20 seconds.

The latency seems to max out at 20 seconds, indicating it may involve the options.refreshTimeout which is also used as a timeout during connection establishment.

There is no external cause or correlation we can find. Stalls occur randomly / intermittent, and seem to occur during otherwise normal conditions.

Runtime Environment

We run MongoS in Kubernetes 1.15.12, Docker 18.6.1, kernel 5.4.15-1.el7.elrepo.x86_64.

Our Docker image for /usr/bin/mongos is built from "deb http://repo.mongodb.org/apt/ubuntu xenial/mongodb-org-unstable/3.6 multiverse" official MongoDB package mongodb-org-mongos:3.6.16 and the binary is unmodified.

Sequence of Events

The task executor stall and related side effects occur as follows:

- Queries arrive at our MongoS instance and call ClusterFind::runQuery which selects the next task executor and calls mongo::establishCursors.

- ConnectionPool::SpecficPool::getConnection(hostAndPort) is called.

- ConnectionPool::SpecificPool::spawnConnections is called, which sees the available connection pool is too small and constructs a new connection, then calls ASIOConnection::setup to establish the connection and add it to the ready pool.

- ASIOConnection::setup dispatches a callback to the ASIO strand with _impl->strand().dispatch() and a lambda callback {{[this, timeout, cb] { ... }}}

- Something occurs which delays invoking the dispatch lambda callback.

- We see this latency take up to 20 seconds maximum.

- This ASIOConnection::setup process repeats for each getConnection(host:port) pair on the stalled task executor.

- Maximum number of simultaneous "connecting" connections defaults to 2. If the getConnection were called on 6 distinct host:port endpoints we can see the number of "connecting" connections spike to 12. This increases the "refreshing" connection count in the pool stats.

- Queries queue up at the stalled task executor waiting for an available connection.

- Applications connected using the Java driver SRV-based cluster select among servers randomly. The stalled server's connection pool in the client becomes exhausted as all connections are waiting on stalled queries.

- Applications gradually begin opening additional connections to the server with the stalled task executor, attempting to increase throughput.

- Some fraction of these new queries will also become queued at the stalled task executor.

- The affected MongoS instance sees a gradual increase in client connections.

- Other task executors in the affected MongoS continue processing queries and maintain normal latencies.

- Only the stalled executor shows increased query latencies, since queries are not being executed and thus don't reach a MongoD instance for many seconds.

- Finally the dispatch lambda callbacks are invoked, which calls ASIOConnection::setTimeout and then initiates the connection process by calling _global->_impl->_connect(_impl.get()); at line 251.

- No connection-related errors are observed in the logs.

- Latency between _connect() and _setupCallback lambda calls appear normal (comparable to the steady state connect times of a few milliseconds). The latency only increases between strand()->dispatch() and _connect().

- Application clients observe increased latency for some random subset of queries executed on the stalled instance during the stall event.

Observations

Using bpftrace I've measured the latency between the following points inside connection_pool_asio.cpp - all line numbers are for the r3.6.16 version of the source:

- At the start of the ASIOConnection::setup method invoked at line 207.

- Immediately before the call to {{setTimeout(timeout, [this, access, generation] { ... }}} at line 242. This indicates our lambda has been invoked by strand().dispatch(..) and we are about to call _global->_impl->_connect(). Latency is delta from (1).

- At the start of execution of the _setupCallback lambda defined at line 209. This indicates the completion of the _connect operation. Latency is delta from (1).

In steady state we see the latency hovering around a few milliseconds. At certain times the task executor stalls for up to 20 seconds between points (1) and (2). We believe this may involve the refresh timeout (default 20 seconds) but have not yet been able to trace the root cause.

Attached is a bpftrace script and a log showing the latencies during a single query stall event.

For example, at 22:44:54.411 the first call to 1. ASIOConnection::setup() is made for connection handle 93874978094592. This takes 16.821 seconds to reach 2. call setTimeout() at 22:45:11.232 and 3. in _setupCallback about 7ms after that.

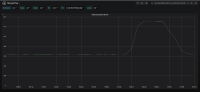

Graphs

**I've attached graphs showing the client connection spike associated with the task executor stall (setup-latency-client-connections.png) as well as a 3-hour and 48-hour graph showing the frequency over time.

Stalls occur with varying frequency depending on the volume of request traffic going to a particular MongoS instance. The probability of a stall increases with increasing traffic – rate of stalls is lower overnight and higher at peak traffic mid-day. Higher traffic tiers experience more frequent spikes than lower traffic tiers.