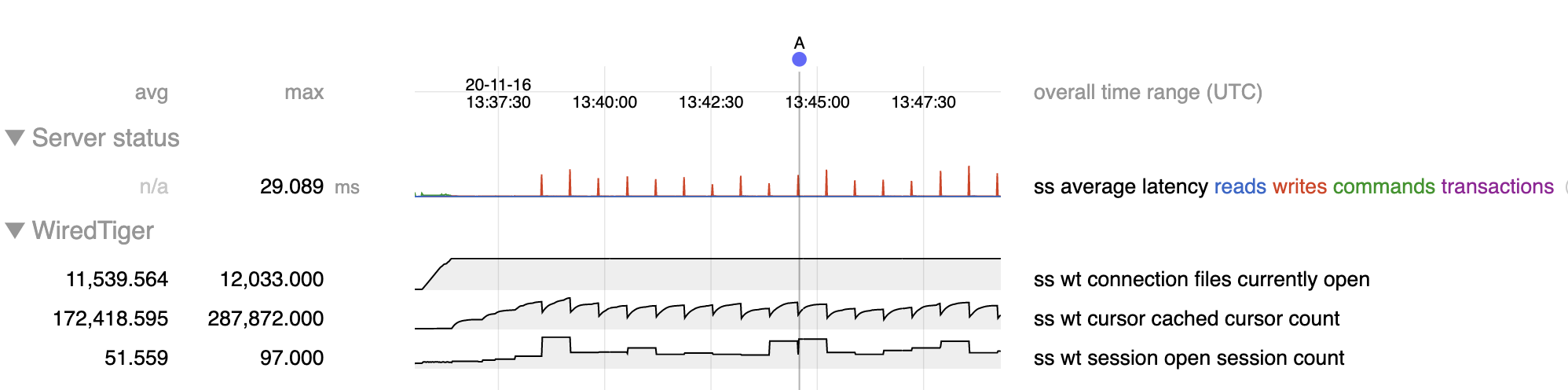

Test creates 1000 collections with 10 secondary indexes each (total 12 k tables) then does inserts at a rate of 1000 per second on 100 threads (10 per second for each thread) against randomly chosen collections. Latency is generally <1 ms but FTDC shows periodic latency spikes of tens of ms:

The spikes coincide with calls to WiredTigerSessionCache::closeExpiredIdleSessions, and the interval between spikes is wiredTigerSessionCloseIdleTimeSecs, which has been set to 30 in the above test. I believe this is what's happening:

- closeExpiredIdleSessions takes a mutex on the WT session cache while closing idle sessions; this delays all operations.

- Because of this up to 100 (number of client threads) operations queue up on the mutex and then proceed concurrently after closeExpiredIdleSessions completes, requiring up to 100 concurrent sessions to complete those operations; after completion those sessions are cached.

- Between expiration intervals operations complete quickly so concurrency is low, so only a small number of the cached sessions are actually used.

- So the next call to closeExpiredIdleSessions after the expiration interval finds that most of the cached sessions are idle, so it closes them while holding the session cache mutex, again delaying up to 100 operations and requiring them to open up to 100 new sessions after closeExpiredIdleSessions finishes closing the 100 old sessions, repeating the cycle.

This causes the pattern of cached cursor count and open sessions that we see in the FTDC above; with the 1-second sample interval of FTDC we only occasionally catch the number of sessions decreasing due to closeExpiredIdleSessions before immediately increasing again, e.g. at A in the chart above.

The magnitude of this effect increases with more tables; perf data shows that closeExpiredIdleSessions spends about 65% of its time in close_cached_cursors and about 25% in __session_discard_dhandle, both of which are proportional to the number of tables.