-

Type:

Bug

-

Resolution: Incomplete

-

Priority:

Major - P3

-

None

-

Affects Version/s: 2.0.2

-

Component/s: Performance

-

None

-

Environment:CentOS release 6.2, Linux bs-linux64.10gen.cc 2.6.21.7-2.ec2.v1.2.fc8xen #1 SMP Fri Nov 20 17:48:28 EST 2009 x86_64 BOOST_LIB_VERSION=1_41

24 GB RAM, Intel(R) Core(TM) i7 CPU 950 @ 3.07GHz, 64bit with 8 cores

-

Linux

disk utilization is low, but the CPU usage is almost 800% when finding documents (yes, we're using indexes on query fields)

we do a lot of searches on indexed fields and sometimes we do a lot of consecutive updates (sometimes on indexed fields) on documents by quering directly individuals _ids

when we do approx 3500 queries/s, we get around 600% CPU usage, and I heard that CPU usage for mongod should never be high.. the server load average stays low (around 0.05)

insert query update delete getmore command flushes mapped vsize res faults locked % idx miss % qr|qw ar|aw netIn netOut conn set repl time

0 3324 0 0 0 2 0 22.2g 26.4g 7.21g 0 0 0 0|0 13|0 384k 121k 65 my_replication M 13:18:08

0 2401 0 0 0 1 0 22.2g 26.4g 7.21g 0 0 0 0|0 0|0 278k 88k 65 my_replication M 13:18:09

0 3354 0 0 0 2 0 22.2g 26.4g 7.21g 0 0 0 0|0 13|0 387k 121k 65 my_replication M 13:18:10

0 3089 0 0 0 1 0 22.2g 26.4g 7.21g 0 0 0 0|0 11|0 358k 112k 64 my_replication M 13:18:11

0 3567 0 0 0 2 0 22.2g 26.4g 7.21g 0 0 0 0|0 10|0 414k 130k 64 my_replication M 13:18:12

0 3603 0 0 0 1 0 22.2g 26.4g 7.21g 0 0 0 0|0 10|0 416k 131k 64 my_replication M 13:18:13

0 3680 0 0 0 2 0 22.2g 26.4g 7.21g 0 0 0 0|0 11|0 424k 134k 64 my_replication M 13:18:14

0 3637 0 0 0 1 0 22.2g 26.4g 7.21g 0 0 0 0|0 7|0 419k 132k 63 my_replication M 13:18:15

0 2635 0 0 0 2 0 22.2g 26.4g 7.21g 0 0 0 0|0 12|0 302k 96k 63 my_replication M 13:18:16

0 3659 0 0 0 1 0 22.2g 26.4g 7.21g 0 0 0 0|0 0|0 420k 133k 63 my_replication M 13:18:17

insert query update delete getmore command flushes mapped vsize res faults locked % idx miss % qr|qw ar|aw netIn netOut conn set repl time

0 3694 0 0 0 2 0 22.2g 26.4g 7.21g 0 0 0 0|0 8|0 423k 134k 63 my_replication M 13:18:18

0 2993 0 0 0 1 0 22.2g 26.4g 7.21g 0 0 0 0|0 4|0 343k 109k 63 my_replication M 13:18:19

0 3516 0 0 0 2 0 22.2g 26.4g 7.21g 0 0 0 0|0 10|0 404k 128k 63 my_replication M 13:18:20

0 3687 0 0 0 1 0 22.2g 26.4g 7.21g 0 0 0 0|0 10|0 423k 134k 63 my_replication M 13:18:22

0 3629 0 0 0 2 0 22.2g 26.4g 7.21g 0 0 0 0|0 7|0 415k 132k 63 my_replication M 13:18:23

0 3622 0 0 0 1 0 22.2g 26.4g 7.21g 0 0 0 0|0 9|0 414k 131k 63 my_replication M 13:18:24

0 3113 0 0 0 2 0 22.2g 26.4g 7.21g 0 0 0 0|0 10|0 356k 113k 62 my_replication M 13:18:25

0 3722 0 0 0 1 0 22.2g 26.4g 7.21g 0 0 0 0|0 9|0 428k 135k 62 my_replication M 13:18:26

0 2870 0 0 0 2 0 22.2g 26.4g 7.21g 0 0 0 0|0 0|0 331k 105k 62 my_replication M 13:18:27

0 3361 0 0 0 16 0 22.2g 26.4g 7.21g 0 0 0 0|0 5|0 386k 128k 62 my_replication M 13:18:28

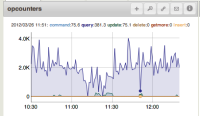

if we then start updating at the same time the queries are running, we get up to around 400 updates/s, but the queries drop to 300/s or even 0/s, as you can see from the MMS graph attached (graph does not show the data from the same timeframe as these logs, but the effect is the same)..

insert query update delete getmore command flushes mapped vsize res faults locked % idx miss % qr|qw ar|aw netIn netOut conn set repl time

0 3 490 0 0 507 0 22.2g 26.4g 7.22g 0 20.7 0 0|0 0|0 152k 65k 51 my_replication M 13:46:50

0 0 493 0 0 495 0 22.2g 26.4g 7.22g 0 21.2 0 0|0 0|0 151k 59k 51 my_replication M 13:46:51

0 0 500 0 0 501 0 22.2g 26.4g 7.22g 0 20.9 0 0|0 0|0 153k 59k 51 my_replication M 13:46:52

0 0 500 0 0 502 0 22.2g 26.4g 7.22g 0 21.2 0 0|0 0|0 153k 60k 51 my_replication M 13:46:53

0 0 485 0 0 486 0 22.2g 26.4g 7.22g 0 20.9 0 0|0 0|0 149k 58k 51 my_replication M 13:46:54

0 0 485 0 0 486 0 22.2g 26.4g 7.22g 0 20.3 0 0|0 0|0 149k 58k 51 my_replication M 13:46:55

0 0 472 0 0 473 0 22.2g 26.4g 7.22g 0 20.2 0 0|0 0|0 145k 56k 51 my_replication M 13:46:56

0 0 481 0 0 484 0 22.2g 26.4g 7.22g 0 20.7 0 0|0 0|0 148k 57k 51 my_replication M 13:46:57

0 0 485 0 0 485 0 22.2g 26.4g 7.22g 0 20.2 0 0|0 0|0 148k 58k 51 my_replication M 13:46:58

0 0 492 0 0 495 0 22.2g 26.4g 7.22g 0 20.6 0 0|0 0|0 151k 59k 51 my_replication M 13:46:59

insert query update delete getmore command flushes mapped vsize res faults locked % idx miss % qr|qw ar|aw netIn netOut conn set repl time

0 0 500 0 0 501 0 22.2g 26.4g 7.22g 0 21 0 0|0 0|0 153k 59k 51 my_replication M 13:47:00

0 0 332 0 0 334 0 22.2g 26.4g 7.22g 0 14 0 0|0 0|0 102k 40k 68 my_replication M 13:47:01

0 2459 203 0 0 203 0 22.2g 26.4g 7.22g 0 6.3 0 3|1 5|1 347k 113k 68 my_replication M 13:47:02

0 3253 216 0 0 218 0 22.2g 26.4g 7.22g 0 5.7 0 2|1 8|1 445k 144k 67 my_replication M 13:47:03

0 2166 142 0 0 143 0 22.2g 26.4g 7.22g 0 3.6 0 0|1 13|1 292k 95k 65 my_replication M 13:47:04

0 3232 207 0 0 210 0 22.2g 26.4g 7.22g 0 5.4 0 0|0 2|0 442k 142k 65 my_replication M 13:47:05

0 3318 200 0 0 201 0 22.2g 26.4g 7.22g 0 5.4 0 0|0 10|0 445k 143k 65 my_replication M 13:47:06

0 3096 250 0 0 252 0 22.2g 26.4g 7.22g 0 6.6 0 2|0 2|1 438k 142k 64 my_replication M 13:47:07

0 91650 540 0 0 555 0 22.2g 26.4g 7.22g 0 6.1 0 0|1 12|1 10m 3m 64 my_replication M 13:47:50

0 3 0 0 0 15 0 22.2g 26.4g 7.22g 0 0.1 0 0|0 0|0 1k 8k 50 my_replication M 13:47:51

insert query update delete getmore command flushes mapped vsize res faults locked % idx miss % qr|qw ar|aw netIn netOut conn set repl time

0 0 0 0 0 2 0 22.2g 26.4g 7.22g 0 0 0 0|0 0|0 215b 1k 50 my_replication M 13:47:52

0 0 0 0 0 1 0 22.2g 26.4g 7.22g 0 0 0 0|0 0|0 62b 1k 50 my_replication M 13:47:53

0 0 0 0 0 2 0 22.2g 26.4g 7.22g 0 0 0 0|0 0|0 215b 1k 50 my_replication M 13:47:54

and for a short time, the HDD gets a higher utilization and the average server load increases to around 2.0

Device: rrqm/s wrqm/s r/s w/s rMB/s wMB/s avgrq-sz avgqu-sz await svctm %util sdb 0.00 0.00 0.16 0.00 0.01 0.00 130.83 0.00 10.00 0.52 0.01 sda 0.11 13.45 0.88 14.86 0.06 0.11 22.25 6.62 420.27 4.14 6.51 md1 0.00 0.00 0.00 0.00 0.00 0.00 7.74 0.00 0.00 0.00 0.00 md0 0.00 0.00 0.00 0.00 0.00 0.00 8.00 0.00 0.00 0.00 0.00 md2 0.00 0.00 0.82 27.96 0.05 0.11 11.32 0.00 0.00 0.00 0.00 Device: rrqm/s wrqm/s r/s w/s rMB/s wMB/s avgrq-sz avgqu-sz await svctm %util sdb 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 sda 0.00 226.00 0.00 303.00 0.00 2.39 16.16 47.37 284.78 3.30 100.00 md1 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 md0 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 md2 0.00 0.00 0.00 426.00 0.00 1.66 7.96 0.00 0.00 0.00 0.00 Device: rrqm/s wrqm/s r/s w/s rMB/s wMB/s avgrq-sz avgqu-sz await svctm %util sdb 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 sda 0.00 546.00 0.00 301.00 0.00 3.22 21.93 75.70 221.28 3.32 100.00 md1 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 md0 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 md2 0.00 0.00 0.00 864.00 0.00 3.37 7.99 0.00 0.00 0.00 0.00 Device: rrqm/s wrqm/s r/s w/s rMB/s wMB/s avgrq-sz avgqu-sz await svctm %util sdb 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 sda 0.00 370.00 0.00 213.00 0.00 2.32 22.27 23.09 151.24 4.48 95.40 md1 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 md0 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 md2 0.00 0.00 0.00 565.00 0.00 2.17 7.86 0.00 0.00 0.00 0.00 Device: rrqm/s wrqm/s r/s w/s rMB/s wMB/s avgrq-sz avgqu-sz await svctm %util sdb 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 sda 0.00 3.00 0.00 7.00 0.00 0.04 11.43 0.10 14.57 14.29 10.00 md1 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 md0 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 md2 0.00 0.00 0.00 8.00 0.00 0.03 8.00 0.00 0.00 0.00 0.00 Device: rrqm/s wrqm/s r/s w/s rMB/s wMB/s avgrq-sz avgqu-sz await svctm %util sdb 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 sda 0.00 0.00 0.00 1.00 0.00 0.00 8.00 0.03 31.00 31.00 3.10 md1 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 md0 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 md2 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 Device: rrqm/s wrqm/s r/s w/s rMB/s wMB/s avgrq-sz avgqu-sz await svctm %util sdb 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 sda 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 md1 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 md0 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 md2 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

this is the serverStatus():

db.serverStatus()

{

"host" : "###SERVER1###",

"version" : "2.0.2",

"process" : "mongod",

"uptime" : 243038,

"uptimeEstimate" : 238124,

"localTime" : ISODate("2012-03-26T11:56:12.316Z"),

"globalLock" : {

"totalTime" : 243037830469,

"lockTime" : 1148740105,

"ratio" : 0.0047265896950414235,

"currentQueue" : {

"total" : 0,

"readers" : 0,

"writers" : 0

},

"activeClients" : {

"total" : 7,

"readers" : 7,

"writers" : 0

}

},

"mem" : {

"bits" : 64,

"resident" : 7390,

"virtual" : 27029,

"supported" : true,

"mapped" : 22758,

"note" : "virtual minus mapped is large. could indicate a memory leak"

},

"connections" : {

"current" : 63,

"available" : 9537

},

"extra_info" : {

"note" : "fields vary by platform",

"heap_usage_bytes" : 375216,

"page_faults" : 69745

},

"indexCounters" : {

"btree" : {

"accesses" : 160738,

"hits" : 160723,

"misses" : 15,

"resets" : 0,

"missRatio" : 0.00009331956351329492

}

},

"backgroundFlushing" : {

"flushes" : 4037,

"total_ms" : 8630655,

"average_ms" : 2137.8882833787466,

"last_ms" : 7,

"last_finished" : ISODate("2012-03-26T11:56:06.277Z")

},

"cursors" : {

"totalOpen" : 1,

"clientCursors_size" : 1,

"timedOut" : 0,

"totalNoTimeout" : 1

},

"network" : {

"bytesIn" : 65203610293,

"bytesOut" : 71520001080,

"numRequests" : 548532387

},

"repl" : {

"setName" : "my_replication",

"ismaster" : true,

"secondary" : false,

"hosts" : [

"###SERVER1###"

],

"arbiters" : [

"###SERVER2###"

],

"primary" : "###SERVER1###",

"me" : "###SERVER1###"

},

"opcounters" : {

"insert" : 8,

"query" : 541326108,

"update" : 3466412,

"delete" : 0,

"getmore" : 12648,

"command" : 3727281

},

"asserts" : {

"regular" : 0,

"warning" : 0,

"msg" : 0,

"user" : 572,

"rollovers" : 0

},

"writeBacksQueued" : false,

"ok" : 1

}

and this are the collection stats:

db.fdy_products.stats()

{

"ns" : "###my-db###.###my-collection###",

"count" : 848036,

"size" : 7271212740,

"avgObjSize" : 8574.17932729271,

"storageSize" : 9138339840,

"numExtents" : 37,

"nindexes" : 9,

"lastExtentSize" : 1526575104,

"paddingFactor" : 1.0099999999512375,

"flags" : 1,

"totalIndexSize" : 672054896,

"indexSizes" : {

"_id_" : 29507184,

"_index_1_" : 52445184,

"_matching_1_" : 64249856,

"_matching_2_" : 81567744,

"_index_2_" : 57614336,

"_index_3_" : 72572928,

"_index_4" : 156557312,

"_index_5" : 120020992,

"_index_6" : 37519360

},

"ok" : 1

}

during such parallel reads/updates, almost every day we get at least one timeout (after 30s) even when using findOne(

{ _id: ObjectId('...') }).. which in my opinion should never happen

we tried both ways of disabling NUMA:

- just for mongod by adding "numactl --interleave=all " before the mongod command (verified that interleave is being used by checking /proc/#/numa_maps)

- for the whole OS, by adding numa=off to boot settings (verified by checking the messages log, where it says: "kernel: NUMA turned off")

we're accessing the DB through a PHP (5.3.3) driver (mongo/1.2.7), but i don't think it has anything to do with this issue