- Type: Bug
- Resolution: Unresolved
- Priority: Major - P3
- Affects Version/s: 4.2.0, 4.4.2
- Component/s: None
- Replication
- ALL
- Repl 2021-05-31, Repl 2021-06-14, Repl 2021-06-28, Repl 2021-07-12, Repl 2021-07-26, Repl 2021-08-09

The parallel insert performance test shows that writes with { w: majority, j: false } perform worse than writes with { w: majority }, which enables journaling by default.
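As a rough illustration of which writes wait for the journal, here is a minimal sketch of how `j` interacts with the replica set setting for { w: majority } writes. The function name and parameters are hypothetical and only model the documented rule that an unspecified `j` falls back to writeConcernMajorityJournalDefault; this is not server code.

```python
from typing import Optional


def majority_write_waits_for_journal(
    j: Optional[bool],
    write_concern_majority_journal_default: bool = True,
) -> bool:
    """Return True if a { w: "majority" } write is acknowledged only after journaling.

    Hypothetical helper: an explicit j wins; an unspecified j (None) falls back
    to the replica set's writeConcernMajorityJournalDefault setting.
    """
    if j is not None:
        return j
    return write_concern_majority_journal_default


# { w: majority } with default settings: journaled acknowledgment.
print(majority_write_waits_for_journal(j=None))
# { w: majority, j: false }: acknowledgment does not require journaling.
print(majority_write_waits_for_journal(j=False))
```

Under this rule the { w: majority, j: false } case should do less work per acknowledgment, which is why its lower throughput is surprising.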

    {
      "end": 1606114697.135247,
      "name": "ParallelInsert-1-Insert_WMajority_JFalse",
      "results": {
        "1": {
          "ops_per_sec": 315.3323923270361,
          "ops_per_sec_values": [
            315.3323923270361
          ]
        }
      },
      "start": 1606112991.5566537,
      "workload": "genny"
    },
    ...
    {
      "end": 1606114697.135247,
      "name": "ParallelInsert-1-Insert_WMajority",
      "results": {
        "1": {
          "ops_per_sec": 373.07617520622085,
          "ops_per_sec_values": [
            373.07617520622085
          ]
        }
      },
      "start": 1606112991.5566537,
      "workload": "genny"
    },

I found this issue using an update workload in my paper experiments against 4.4.2 in a 5-node setting. Oddly, the latency is about 100 ms for some operations even when there is just one client. This seems related to storage.journal.commitIntervalMs, the interval used by the journal flusher, which defaults to 100 ms.

| | throughput | latency_50_in_ms | latency_90_in_ms | latency_99_in_ms | thread_num | iteration |
|---|---|---|---|---|---|---|
| 0 | 33.166667 | 60.167260 | 99.918017 | 100.078770 | 2 | 0 |
| 5 | 266.000000 | 60.109203 | 99.889717 | 100.047515 | 16 | 0 |
| 3 | 1010.766667 | 63.347630 | 101.193805 | 102.650609 | 64 | 0 |
| 2 | 3390.566667 | 75.609942 | 106.122061 | 110.796925 | 256 | 0 |
| 4 | 8377.533333 | 122.405804 | 130.348062 | 140.317615 | 1024 | 0 |
| 1 | 13738.645161 | 144.346179 | 179.020890 | 230.821927 | 2048 | 0 |
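A quick sanity check on the table above: if the load generator is closed-loop (an assumption; each thread keeps one operation outstanding with no think time), Little's law gives a mean latency of roughly thread_num / throughput. The sketch below applies that to the rows above, assuming throughput is in operations per second. The helper name is hypothetical.

```python
# (thread_num, throughput in ops/s, reported p50 latency in ms), copied from the table above.
rows = [
    (2, 33.166667, 60.167260),
    (16, 266.000000, 60.109203),
    (64, 1010.766667, 63.347630),
    (256, 3390.566667, 75.609942),
    (1024, 8377.533333, 122.405804),
    (2048, 13738.645161, 144.346179),
]


def implied_mean_latency_ms(threads: int, ops_per_sec: float) -> float:
    """Little's law for a closed loop: mean latency = concurrency / throughput."""
    return threads / ops_per_sec * 1000.0


for threads, throughput, p50_ms in rows:
    mean_ms = implied_mean_latency_ms(threads, throughput)
    print(f"{threads:5d} threads: implied mean {mean_ms:7.2f} ms, reported p50 {p50_ms:7.2f} ms")
```

The implied mean stays near 60 ms from 2 to 64 threads, so at low concurrency throughput appears to be bounded by a fixed per-operation wait rather than by server capacity, which fits the suspicion about a periodic flush.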

After I changed writeConcernMajorityJournalDefault to false, { w: majority } started to perform as expected.
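For reference, writeConcernMajorityJournalDefault is a top-level field of the replica set configuration, so changing it means submitting a new configuration through replSetReconfig. The sketch below only builds the command document; the sample config, host name, and helper name are hypothetical. In practice the current config would come from replSetGetConfig and the command would be sent to the primary's admin database through a driver or the shell.

```python
import copy


def with_majority_journal_default(config: dict, enabled: bool) -> dict:
    """Return a copy of a replica set config with the setting changed.

    The version is bumped because a reconfig is expected to carry a higher
    version than the current config (shell helpers usually do this for you).
    """
    new_config = copy.deepcopy(config)
    new_config["writeConcernMajorityJournalDefault"] = enabled
    new_config["version"] = config["version"] + 1
    return new_config


# Hypothetical, heavily trimmed config; a real one has more members and fields.
current = {
    "_id": "rs0",
    "version": 3,
    "members": [{"_id": 0, "host": "node0.example:27017"}],
}

reconfig_cmd = {"replSetReconfig": with_majority_journal_default(current, False)}
print(reconfig_cmd)
```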

| | throughput | latency_50_in_ms | latency_90_in_ms | latency_99_in_ms | thread_num | iteration |
|---|---|---|---|---|---|---|
| 0 | 1231.566667 | 1.586717 | 2.106353 | 2.762118 | 2 | 0 |
| 3 | 11776.800000 | 5.379346 | 6.699356 | 11.847912 | 64 | 0 |
| 4 | 14283.500000 | 8.906318 | 10.432745 | 13.263393 | 128 | 0 |
| 1 | 14588.533333 | 17.495040 | 22.730308 | 29.815806 | 256 | 0 |
| 2 | 15812.966667 | 32.327074 | 36.412116 | 52.309199 | 512 | 0 |

For comparison, the default setting with { writeConcernMajorityJournalDefault: true } and { w: majority } gives lower but comparable throughput, as expected.

| | throughput | latency_50_in_ms | latency_90_in_ms | latency_99_in_ms | thread_num | iteration |
|---|---|---|---|---|---|---|
| 0 | 1166.633333 | 1.673785 | 2.223570 | 2.948424 | 2 | 0 |
| 5 | 4957.833333 | 3.171073 | 4.634047 | 7.260699 | 16 | 0 |
| 3 | 9701.066667 | 6.537874 | 7.292936 | 49.282507 | 64 | 0 |
| 2 | 12332.733333 | 20.704192 | 25.998145 | 64.597367 | 256 | 0 |
| 4 | 13330.633333 | 76.797501 | 115.536679 | 176.026491 | 1024 | 0 |
| 1 | 15191.266667 | 135.013036 | 169.713326 | 221.682833 | 2048 | 0 |

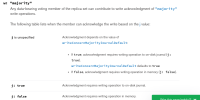

My theory is that the code fails to signal waiting writers when the positions are updated, because that signal is conditional on writeConcernMajorityJournalDefault.

- depends on
  - SERVER-86685 Upgrade clusters using j:false with w:majority writes to use j:true (Closed)
- is related to
  - SERVER-59776 50% regression in single multi-update (Closed)
  - SERVER-54939 Investigate secondary batching behavior in v4.4 (Closed)
- related to
  - SERVER-64341 W:1 writes with j: false perform worse than j: true with high concurrency (Closed)