Our SecureAllocator attempts to lock memory pages so that the OS does not page them to disk. On Linux we use mlock, and on Windows we use VirtualLock.
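For readers unfamiliar with the two calls, here is a minimal sketch of platform-specific page locking. The helper names `lockPages` and `unlockPages` are hypothetical and are not taken from the SecureAllocator source; only `mlock`/`munlock` and `VirtualLock`/`VirtualUnlock` are real OS APIs.

```cpp
#include <cassert>
#include <cstddef>
#ifdef _WIN32
#include <windows.h>
#else
#include <sys/mman.h>
#endif

// Hypothetical helper (not the real SecureAllocator code): ask the OS to keep
// the pages covering [ptr, ptr + len) resident. Returns true on success.
bool lockPages(void* ptr, std::size_t len) {
    assert(ptr != nullptr && len > 0);
#ifdef _WIN32
    return VirtualLock(ptr, len) != 0;  // nonzero means success
#else
    return mlock(ptr, len) == 0;        // zero means success
#endif
}

// Hypothetical counterpart that releases the lock taken by lockPages.
bool unlockPages(void* ptr, std::size_t len) {
    assert(ptr != nullptr && len > 0);
#ifdef _WIN32
    return VirtualUnlock(ptr, len) != 0;
#else
    return munlock(ptr, len) == 0;
#endif
}
```

Either call can fail when the process hits its lock quota (RLIMIT_MEMLOCK on Linux, the working set limit on Windows), so callers should check the return value rather than assume the pages are pinned.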

Windows imposes limits on the number of pages that can be locked by VirtualLock. From the documentation:

> Each version of Windows has a limit on the maximum number of pages a process can lock. This limit is intentionally small to avoid severe performance degradation. Applications that need to lock larger numbers of pages must first call the SetProcessWorkingSetSize function to increase their minimum and maximum working set sizes.

In SERVER-23705, we started using the SetProcessWorkingSetSize API when we reach these limits.
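The grow-and-retry pattern described by the documentation can be sketched roughly as follows. This is an illustration under assumptions, not the code from SERVER-23705: `WorkingSetBounds`, `growBounds`, and `lockWithGrowth` are hypothetical names, and growing both bounds by exactly the requested byte count is an assumed policy.

```cpp
#include <cassert>
#include <cstddef>
#ifdef _WIN32
#include <windows.h>
#endif

// Hypothetical type: minimum and maximum working set sizes, in bytes.
struct WorkingSetBounds {
    std::size_t min;
    std::size_t max;
};

// Assumed policy: raise both bounds by the number of bytes we want to lock.
WorkingSetBounds growBounds(WorkingSetBounds cur, std::size_t extraBytes) {
    assert(cur.min <= cur.max);
    return {cur.min + extraBytes, cur.max + extraBytes};
}

#ifdef _WIN32
// Hypothetical helper: try VirtualLock, and if the working set quota is
// exhausted, enlarge the working set and retry once.
bool lockWithGrowth(void* ptr, std::size_t len) {
    if (VirtualLock(ptr, len)) {
        return true;
    }
    if (GetLastError() != ERROR_WORKING_SET_QUOTA) {
        return false;  // failed for a reason that growing will not fix
    }
    HANDLE self = GetCurrentProcess();
    SIZE_T minWs = 0;
    SIZE_T maxWs = 0;
    if (!GetProcessWorkingSetSize(self, &minWs, &maxWs)) {
        return false;
    }
    WorkingSetBounds next = growBounds({minWs, maxWs}, len);
    if (!SetProcessWorkingSetSize(self, next.min, next.max)) {
        return false;
    }
    return VirtualLock(ptr, len) != 0;
}
#endif
```

Note that the call to SetProcessWorkingSetSize in this sketch changes the bounds for the whole process, not just for the locked region, which is the side effect this ticket is concerned with.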

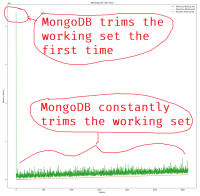

Even when the pagefile is disabled, we have observed strange performance behavior where Windows moves essentially all of mongod's memory from the Active state to the Inactive state. This is accompanied by very long stalls. In FTDC, the observed effect is that mongod reports no resident memory.

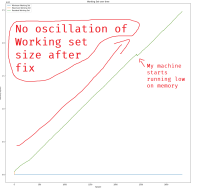

My theory is that SetProcessWorkingSetSize tells the OS that certain memory is important, and by implication that everything outside of the working set is not important. As a result of setting the working set to a small value in order to lock pages, the OS also decides to mark the remaining resident memory as "Inactive", even if there is plenty of free memory available in the system. This has serious performance implications that we should investigate and understand.

It seems like we need to either disprove this theory, find a different API, or always use SetProcessWorkingSetSize to ensure that the entire process's memory is treated as important.

- is related to: SERVER-74289 Add statistics for secure allocator to server status (Closed)
- related to: SERVER-23705 Number of databases on Windows is limited when using on-disk encryption (Closed)