Type: Task

Resolution: Unresolved

Priority: Minor - P4

Affects Version/s: None

Component/s: None

Atlas Streams

Sprint 55

This is the pipeline I used in the test:

https://github.com/10gen/mongohouse/blob/master/testdata/bin/streams-smoke/utils/pipelines.go#L103

This is what the input docs look like:

https://github.com/10gen/mongohouse/blob/master/testdata/bin/streams-smoke/utils/pipelines.go#L194

This is where the number of docs to insert is calculated, based on an MBSTATEPERWINDOW of 4000:

https://github.com/10gen/mongohouse/blob/master/testdata/bin/streams-smoke/smoke_test.go#L308
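
For readers without access to the repo, here is a rough sketch of what such a calculation might look like. This is not the code in smoke_test.go. It assumes the doc count is simply the target state size divided by an approximate per-document size, rounded up. The names `docsForWindow` and `approxDocBytes`, and the 1 MB = 1024*1024 bytes convention, are hypothetical and only for illustration.

```go
package main

import "fmt"

// docsForWindow returns how many documents are needed to reach roughly
// mbStatePerWindow megabytes of window state, rounding up.
// Hypothetical helper: approxDocBytes is an assumed per-document size,
// and 1 MB is assumed to be 1024*1024 bytes.
func docsForWindow(mbStatePerWindow, approxDocBytes int64) int64 {
	if approxDocBytes <= 0 {
		return 0 // no meaningful answer without a positive doc size
	}
	targetBytes := mbStatePerWindow * 1024 * 1024
	// Integer ceiling division so the target size is reached, not undershot.
	return (targetBytes + approxDocBytes - 1) / approxDocBytes
}

func main() {
	// MBSTATEPERWINDOW of 4000 with an assumed 1 KiB of state per document.
	fmt.Println(docsForWindow(4000, 1024))
}
```

With an assumed 1 KiB per document, a 4000 MB window works out to about 4.1 million docs. The real per-document state size depends on the pipeline and input docs linked above.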

When I tested this, the memory tracker reported the expected memory usage while the window was open (about 4GB), then hit “out of memory” on SP30 when closing the window. Note that it’s a clean memory tracker error, not an OS OOM, so we might not have to fix anything in the short term.

But it would be interesting to understand why closing the window causes such a large spike in memory consumption.

===

See https://docs.google.com/document/d/1zPurErldtRGkl9COOM8jv_R_SAkfn4KW2Zd0temb71I/edit

=====

In this PR, I added a smoketest that creates a 6GB window.

Things work fine when the window is open, and the SP reports the expected stateSize. When the window is closed, the SP runs into "Stream processing instance out of memory".

We should understand where this memory spike comes from; it doesn't seem like it should be happening. We can likely re-create this using jstests against a local engine build. We can also repro this in the service environment using the smoke test I added.

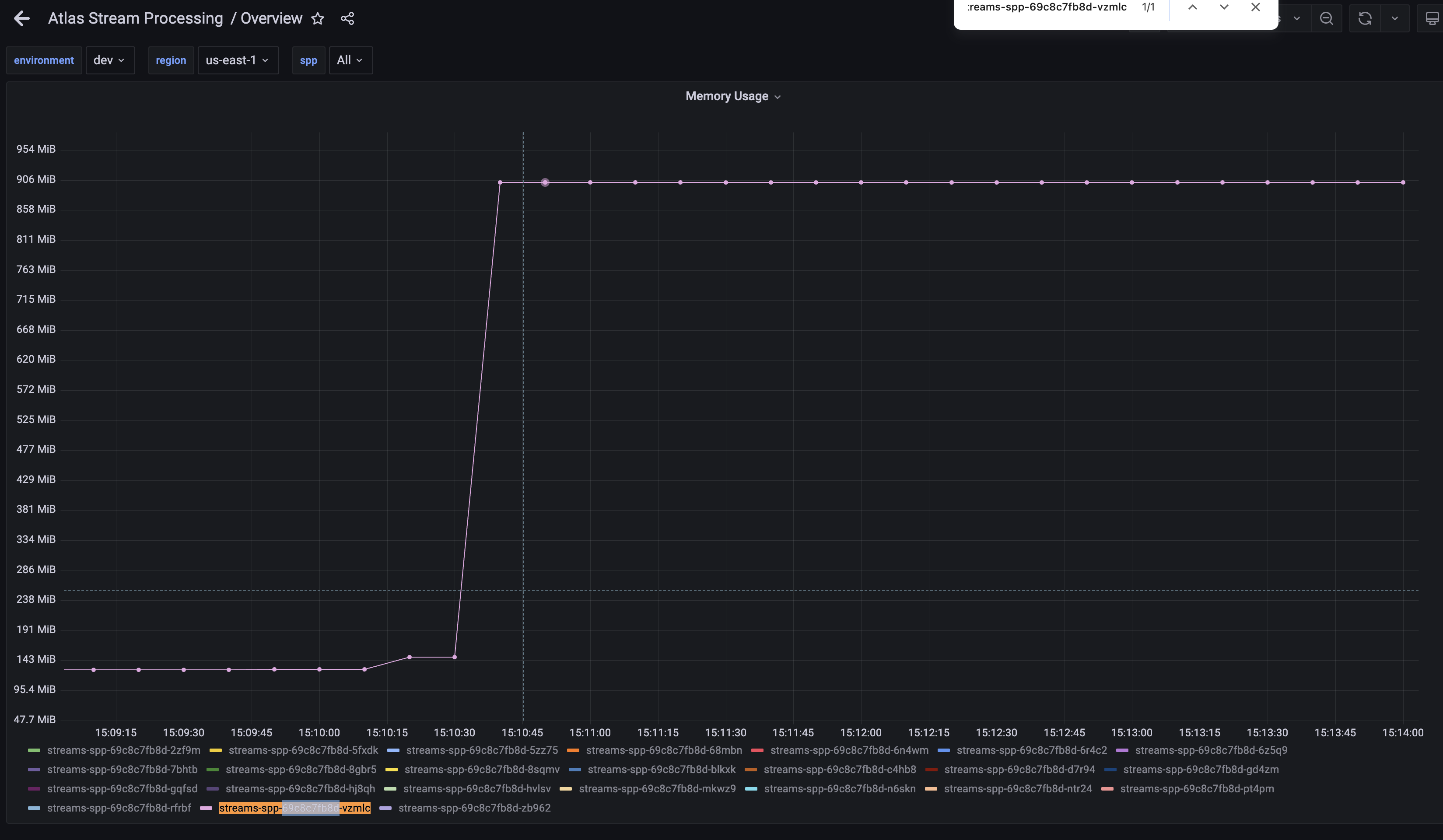

Another odd thing: the memory consumption reported by Grafana/Prom and the SP state size disagree while the "6GB" window is open. The SP reports a state size of 6GB, while Grafana reports ~1GB.

AtlasStreamProcessing> sp.local_immortal_smoke_test_c075d2ba_e26e6f6f.stats()

{

ok: 1,

ns: '651f3b1e28508c679f051ee5.651efa6e04b6d81727d5fa9f.local_immortal_smoke_test_c075d2ba_e26e6f6f',

stats:

,

is duplicated by: SERVER-84230 Streams: investigate word count query that OOMs pod (Closed)