- Type: Bug
- Resolution: Done
- Priority: Major - P3
- Affects Version/s: None
- Component/s: mongoreplay
- None
- Platforms 2016-11-21, Platforms 2017-01-23
- v3.4

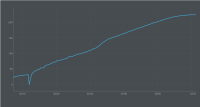

While replaying a recording, we hit the maximum number of TCP connections on our server (64k). The number of connections appears to have been increasing continuously since the beginning of the replay.

After investigation, it seems that a new mongo client is created for each Source -> Destination pair. Our recording contains many connections being opened and closed (this is expected between a mongos and a mongod because of the ASIO driver), so the number of connection pools keeps growing over time even though they are no longer used (the recorded connections have actually been destroyed). We hit this problem (non-critical for us) with the recording provided in TOOLS-1553.
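To illustrate the suspected mechanism, here is a minimal toy model. It is not mongoreplay's actual code: `FakeClient`, `ReplaySessions`, and `simulate` are hypothetical names, and the `closeOnEnd` switch only contrasts "keep every client forever" with "release the client when the recorded connection ends".

```javascript
// Toy model only: all names here are hypothetical, not taken from mongoreplay.
class FakeClient {
  constructor() { this.open = true; }
  close() { this.open = false; }
}

class ReplaySessions {
  constructor(closeOnEnd) {
    this.closeOnEnd = closeOnEnd;
    this.clients = new Map(); // key: "source->destination"
  }

  // Called for every recorded operation: create a client on first sight of a key.
  onOp(connKey) {
    if (!this.clients.has(connKey)) {
      this.clients.set(connKey, new FakeClient());
    }
  }

  // Called when the recording shows that the connection ended.
  onConnectionEnd(connKey) {
    if (!this.closeOnEnd) return; // leaky variant: the client is never released
    const client = this.clients.get(connKey);
    if (client) {
      client.close();
      this.clients.delete(connKey);
    }
  }

  openCount() { return this.clients.size; }
}

// Replay `n` short-lived recorded connections and report how many clients remain.
function simulate(closeOnEnd, n) {
  const sessions = new ReplaySessions(closeOnEnd);
  for (let i = 0; i < n; i++) {
    const key = `127.0.0.1:${40000 + i}->127.0.0.1:27017`;
    sessions.onOp(key);
    sessions.onConnectionEnd(key);
  }
  return sessions.openCount();
}
```

In this model, `simulate(false, 1000)` leaves 1000 clients open while `simulate(true, 1000)` leaves none, which matches the shape of the growth we observe.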

You can reproduce this behavior by opening/closing connections while recording:

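```javascript
i = 0
// Insert one document so the queries below have something to return.
db.test.insert({dummy: true})

while (true) {
    // Open a fresh connection every 1000 queries (including the very first one).
    if (! (i % 1000)) {
        db2 = new Mongo("mongodb://localhost:27017")
    }
    db2.getDB("test").getCollection("test").find().limit(10).toArray()
    i = i + 1
}
```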

While recording, mongostat reports a stable number of connections:

```
insert query update delete getmore command dirty used flushes vsize   res qrw arw net_in net_out conn  set repl time
    *0  3638     *0     *0       0     7|0  0.0% 0.6%       0 2.64G  145M 0|0 0|0   340k   31.7m    5 test  PRI Nov 23 15:33:45.033
    *0  3509     *0     *0       0    10|0  0.0% 0.6%       0 2.64G  145M 0|0 0|0   328k   30.6m    5 test  PRI Nov 23 15:33:46.035
    *0  3519     *0     *0       0     8|0  0.0% 0.6%       0 2.64G  145M 0|0 0|0   328k   30.7m    5 test  PRI Nov 23 15:33:47.033
    *0  3599     *0     *0       0    10|0  0.0% 0.6%       0 2.64G  145M 0|0 0|0   336k   31.4m    6 test  PRI Nov 23 15:33:48.034
    *0  3600     *0     *0       0     9|0  0.0% 0.6%       0 2.64G  145M 0|0 0|0   336k   31.4m    7 test  PRI Nov 23 15:33:49.035
    *0  3586     *0     *0       0    11|0  0.0% 0.6%       0 2.64G  145M 0|0 0|0   335k   31.2m    5 test  PRI Nov 23 15:33:50.034
    *0  3419     *0     *0       0     8|0  0.0% 0.6%       0 2.64G  145M 0|0 1|0   319k   29.8m    5 test  PRI Nov 23 15:33:51.032
    *0  3144     *0     *0       0    10|0  0.0% 0.6%       0 2.64G  145M 0|0 1|0   294k   27.4m    6 test  PRI Nov 23 15:33:52.035
    *0  3300     *0     *0       0     8|0  0.0% 0.6%       0 2.64G  145M 0|0 0|0   308k   28.8m    6 test  PRI Nov 23 15:33:53.032
    *0  3322     *0     *0       0     8|0  0.0% 0.6%       0 2.64G  145M 0|0 0|0   310k   29.0m    6 test  PRI Nov 23 15:33:54.035
```

While replaying, the number of connections keeps increasing:

```
insert query update delete getmore command dirty used flushes vsize   res qrw arw net_in net_out conn  set repl time
    *0  3527     *0     *0       0    54|0  0.0% 0.6%       0 2.71G 80.0M 0|0 0|0   331k   30.8m  133 test  PRI Nov 23 15:55:10.533
    *0  3205     *0     *0       0    36|0  0.0% 0.6%       0 2.71G 80.0M 0|0 0|0   300k   28.0m  140 test  PRI Nov 23 15:55:11.532
    *0  3517     *0     *0       0    34|0  0.0% 0.6%       0 2.71G 80.0M 0|0 0|0   329k   30.7m  146 test  PRI Nov 23 15:55:12.532
    *0  3596     *0     *0       0    40|0  0.0% 0.6%       0 2.72G 81.0M 0|0 0|0   337k   31.4m  154 test  PRI Nov 23 15:55:13.533
    *0  3444     *0     *0       0    35|0  0.0% 0.6%       0 2.72G 81.0M 0|0 0|0   322k   30.1m  161 test  PRI Nov 23 15:55:14.532
    *0  3637     *0     *0       0    62|0  0.0% 0.6%       0 2.73G 81.0M 0|0 0|0   342k   31.8m  169 test  PRI Nov 23 15:55:15.537
    *0  3511     *0     *0       0    41|0  0.0% 0.6%       0 2.73G 81.0M 1|0 0|0   329k   30.6m  177 test  PRI Nov 23 15:55:16.539
    *0  3520     *0     *0       0    37|0  0.0% 0.6%       0 2.73G 81.0M 0|0 0|0   330k   30.7m  183 test  PRI Nov 23 15:55:17.533
    *0  3570     *0     *0       0    40|0  0.0% 0.6%       0 2.74G 81.0M 0|0 0|0   335k   31.2m  190 test  PRI Nov 23 15:55:18.533
    *0  3610     *0     *0       0    36|0  0.0% 0.6%       0 2.74G 81.0M 0|0 0|0   338k   31.5m  196 test  PRI Nov 23 15:55:19.532
```
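As a rough back-of-the-envelope check, the `conn` column of the replay samples above can be turned into a growth rate. The sketch below assumes the samples are one second apart and treats "64k" as 64,000; the helper names `growthRate` and `secondsUntilLimit` are made up for this example.

```javascript
// conn values copied from the ten replay samples above (assumed 1 s apart).
const replayConn = [133, 140, 146, 154, 161, 169, 177, 183, 190, 196];

// Average number of new connections per sample interval.
function growthRate(samples) {
  const intervals = samples.length - 1;
  return (samples[samples.length - 1] - samples[0]) / intervals;
}

// Seconds until `limit` is reached if growth stays linear at `rate` per second.
function secondsUntilLimit(current, limit, rate) {
  return (limit - current) / rate;
}

const rate = growthRate(replayConn); // (196 - 133) / 9 intervals
const limit = 64000;                 // assumption: "64k" read as 64,000
const eta = secondsUntilLimit(replayConn[replayConn.length - 1], limit, rate);

console.log(`growth: ${rate} connections/s`);
console.log(`time to limit: about ${(eta / 3600).toFixed(1)} hours`);
```

With these samples the growth is 7 connections per second, so at a constant rate the limit would be reached after roughly two and a half hours of replay.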

- related to: TOOLS-1553 PreProcessing is failling with "got invalid document size" (Closed)