- Type: Improvement
- Resolution: Done
- Priority: Major - P3
- Affects Version/s: None
- Component/s: None
- None
- Storage Engines

I've been running a workload that does random inserts. The keys are 24 bytes and the values are 225 bytes.

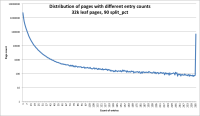

The page size is 4KB. After running for a while, I end up with a ~7GB database. The distribution of key/value pairs across the on-disk leaf pages is:

| Page count | Pairs per page |
|---|---|
| 4530 | 1 |
| 4609 | 2 |
| 4018 | 3 |
| 3810 | 4 |
| 159912 | 5 |
| 112646 | 6 |
| 68976 | 7 |
| 42168 | 8 |
| 27469 | 9 |
| 19701 | 10 |
| 260192 | 11 |
| 123105 | 12 |
| 115519 | 13 |
| 108806 | 14 |
| 99244 | 15 |

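This suggests that a page can hold at most 15 key/value pairs. There are roughly 1.15 million leaf pages in total. About 290 thousand of those hold six or fewer pairs, which is less than half of the possible 15. The figure rises to about 360 thousand if pages holding seven pairs are also counted.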
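The figures above can be checked with a few lines of Python. This is plain arithmetic on the table. `PAGE_SIZE = 4096` assumes "4k" means 4 KiB. `PAIR_BYTES` ignores any per-page or per-item overhead, and that overhead is presumably why the observed maximum is 15 pairs rather than the raw 16.

```python
# Pairs per page -> number of leaf pages, copied from the table above.
PAGES_BY_PAIRS = {
    1: 4530, 2: 4609, 3: 4018, 4: 3810, 5: 159912,
    6: 112646, 7: 68976, 8: 42168, 9: 27469, 10: 19701,
    11: 260192, 12: 123105, 13: 115519, 14: 108806, 15: 99244,
}

PAGE_SIZE = 4096       # assumption: "4k" read as 4096 bytes
PAIR_BYTES = 24 + 225  # key + value bytes; ignores page/cell overhead

total_pages = sum(PAGES_BY_PAIRS.values())
total_pairs = sum(n * pages for n, pages in PAGES_BY_PAIRS.items())
max_pairs = max(PAGES_BY_PAIRS)  # fullest page observed

# "Less than half" of 15 can mean <= 6 pairs, or <= 7 pairs (< 7.5).
six_or_fewer = sum(p for n, p in PAGES_BY_PAIRS.items() if n <= 6)
under_half = sum(p for n, p in PAGES_BY_PAIRS.items() if n < max_pairs / 2)

# Average fraction of the observed 15-pair capacity that is actually used.
mean_fill = total_pairs / (total_pages * max_pairs)

# Pairs that would fit in one page if there were no overhead at all.
raw_capacity = PAGE_SIZE // PAIR_BYTES

print(f"leaf pages:           {total_pages}")
print(f"key/value pairs:      {total_pairs}")
print(f"pages with <= 6:      {six_or_fewer}")
print(f"pages with < 7.5:     {under_half}")
print(f"mean fill:            {mean_fill:.1%}")
print(f"raw pairs per page:   {raw_capacity}")
```

For this table the script reports 1,154,705 leaf pages holding 11,633,948 pairs. Of those pages, 289,525 hold six or fewer pairs, and 358,501 hold seven or fewer. The average fill is about 67%, and the raw capacity is 16 pairs per 4096-byte page.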

Ideally, every page would be at least half full. Pages created with only a few entries widen the tree (more leaf pages for the same data). A page with few entries typically covers a narrow slice of the key space, so it is relatively unlikely to be read back in to have content added later. In other words, such pages are likely to waste space indefinitely.

There are also many pages that are very full, which is bad for this workload. Because eviction is aggressive, those pages are read back in, updated, and then split into two unequal pages.
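The following toy model in Python shows how the split point affects page fill under random inserts. It is a minimal sketch and not WiredTiger's reconciliation or split code. `CAPACITY` is taken as 15 from the table. The `simulate` helper and both split policies are hypothetical.

With an even split and an insert-only workload, every page keeps at least 8 pairs after the first split. The skewed policy cuts just before the last pair, so every split it performs creates a new single-pair page.

```python
import bisect
import random
from collections import Counter

CAPACITY = 15  # max pairs per leaf page, inferred from the table (assumption)


def simulate(n_inserts, split_at, seed=0):
    """Toy leaf-level model of random inserts into a B-tree.

    Leaves are sorted lists of keys. When an insert pushes a leaf past
    CAPACITY, split_at(size) returns the index at which it is cut in two.
    Returns a Counter mapping pairs-per-page to the number of pages.
    """
    rng = random.Random(seed)
    leaves = [[]]           # sorted key lists, ordered left to right
    lows = [float("-inf")]  # lowest key routed to each leaf
    for _ in range(n_inserts):
        key = rng.random()
        i = bisect.bisect_right(lows, key) - 1  # leaf whose range holds key
        leaf = leaves[i]
        bisect.insort(leaf, key)
        if len(leaf) > CAPACITY:
            cut = split_at(len(leaf))
            assert 1 <= cut < len(leaf), "both halves must be non-empty"
            left, right = leaf[:cut], leaf[cut:]
            leaves[i:i + 1] = [left, right]
            lows.insert(i + 1, right[0])  # new separator for the right half
    return Counter(len(leaf) for leaf in leaves)


# Hypothetical policies: cut in the middle vs. cut just before the last pair.
even = simulate(20_000, lambda size: size // 2)
skewed = simulate(20_000, lambda size: size - 1)

for name, hist in (("even", even), ("skewed", skewed)):
    print(name, sorted(hist.items()))
```

In this model, the page holding the fewest pairs after a split is set entirely by the split point. That is why an uneven split tends to leave behind sparsely filled pages.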

- is depended on by
  - SERVER-22388 WiredTiger changes for MongoDB 3.3.2 (Closed)
  - SERVER-22570 WiredTiger changes for MongoDB 3.2.4 (Closed)
- is related to
  - SERVER-22898 High fragmentation on WiredTiger databases under write workloads (Closed)
- related to
  - WT-2366 Extend wtperf to support updates that grow the record size (Closed)