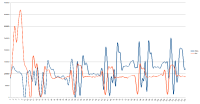

For the given workload, wiredtiger/develop achieved roughly 6 times fewer update ops/s than mongodb-3.2.1 (18957 vs. 113048 ops/sec).

Run summary with develop:

Executed 0 read operations (0%) 0 ops/sec

Executed 0 insert operations (0%) 0 ops/sec

Executed 0 truncate operations (0%) 0 ops/sec

Executed 11374430 update operations (100%) 18957 ops/sec

Executed 5 checkpoint operations

Run summary with mongodb-3.2.1:

Executed 0 read operations (0%) 0 ops/sec

Executed 0 insert operations (0%) 0 ops/sec

Executed 0 truncate operations (0%) 0 ops/sec

Executed 67828924 update operations (100%) 113048 ops/sec

Executed 6 checkpoint operations

Workload:

    # wtperf options file: Stress checkpoint data volume
    conn_config="cache_size=10GB,log=(enabled=false),statistics=(fast),statistics_log=(json=1,wait=1)"
    table_config="leaf_page_max=32k,internal_page_max=16k,allocation_size=4k,split_pct=90,type=file"
    # Enough data to fill the cache. 100 million 1k records results in two ~6GB
    # tables
    icount=100000000
    create=false
    compression="snappy"
    populate_threads=1
    checkpoint_interval=60
    checkpoint_threads=1
    report_interval=5
    run_time=600
    # MongoDB always has multiple tables, and checkpoints behave differently when
    # there is more than a single table.
    table_count=2
    threads=((count=6,updates=1))
    value_sz=1000
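As a sanity check on the run summaries, the reported rates can be reproduced from the raw update counts. This is a minimal sketch that assumes each run lasted exactly the configured `run_time=600` seconds; wtperf may compute its own ops/sec figure slightly differently, and the variable and function names here are only illustrative.

```python
# Assumption: both runs lasted the configured run_time of 600 seconds.
RUN_TIME_S = 600

# Update counts copied from the two run summaries.
updates = {"develop": 11374430, "mongodb-3.2.1": 67828924}


def ops_per_sec(total_ops, seconds=RUN_TIME_S):
    """Average throughput over the whole run."""
    return total_ops / seconds


rates = {name: ops_per_sec(count) for name, count in updates.items()}

# How many times slower develop is than mongodb-3.2.1 on this workload.
slowdown = rates["mongodb-3.2.1"] / rates["develop"]

for name, rate in rates.items():
    print(f"{name}: {rate:.0f} update ops/sec")
print(f"develop is {slowdown:.2f}x slower than mongodb-3.2.1")
```

Under that assumption the computed rates match the reported 18957 and 113048 ops/sec, and the ratio comes out just under 6.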

- related to WT-2389 Spread Out I/O Rather Than Spiking During Checkpoints (Closed)