-

Type:

Improvement

-

Resolution: Unresolved

-

Priority:

Major - P3

-

None

-

Affects Version/s: None

-

Component/s: None

-

None

-

13

External user Moditha wrote to us asking a few queries and possible improvements to eviction slot calculations. I have created this ticket to track the work required to handle any investigations and improvements. Following is the query from the user:

Hi,

I have been analysing the cache behaviour of WiredTiger in MongoDB for some time and I was testing Mongo v4.2 recently and found something that I find strange. From my understanding of the queue of the cache. There are these two parameters

#define WT_EVICT_WALK_BASE 300 /* Pages tracked across file visits */ #define WT_EVICT_WALK_INCR 100 /* Pages added each walk */

In my understanding we keep track of 300 pages and add 100 each walk and discard the pages beyond 300 after sorting.

This is the calculation for the target pages for a given b-tree per walk in evict_lru.c.

btree_inuse = __wt_btree_bytes_evictable(session); cache_inuse = __wt_cache_bytes_inuse(cache); bytes_per_slot = 1 + cache_inuse / cache->evict_slots; target_pages_clean = (uint32_t)((btree_inuse + bytes_per_slot / 2) / bytes_per_slot);

And the evict slots are defined as follows in conn_cache.c

cache->evict_slots = WT_EVICT_WALK_BASE + WT_EVICT_WALK_INCR;

So cache->evict_slots is calculated as a proportion out of 400 where we are only adding 100 pages. Imagine a situation where there are 2 trees and the target pages are 310 and 90 respectively. if the walk is done on the first tree it will only take 100 pages out of the 310 it is suppose to pick and 0 from the second tree as we have filled the 100 remaining slots. if the second tree comes first it will pick 90 from it and only 10 from the first tree. At the end the second tree (smaller ones) will evict more.

Why would you define a queue with 400 slots to fill only 100?

Also, why would you overdimension the walks computing their sizes based on the 400, if 3/4 of them are not going to fit in the queue?

Shouldn't the line be

bytes_per_slot = 1 + cache_inuse /WT_EVICT_WALK_INCR ?

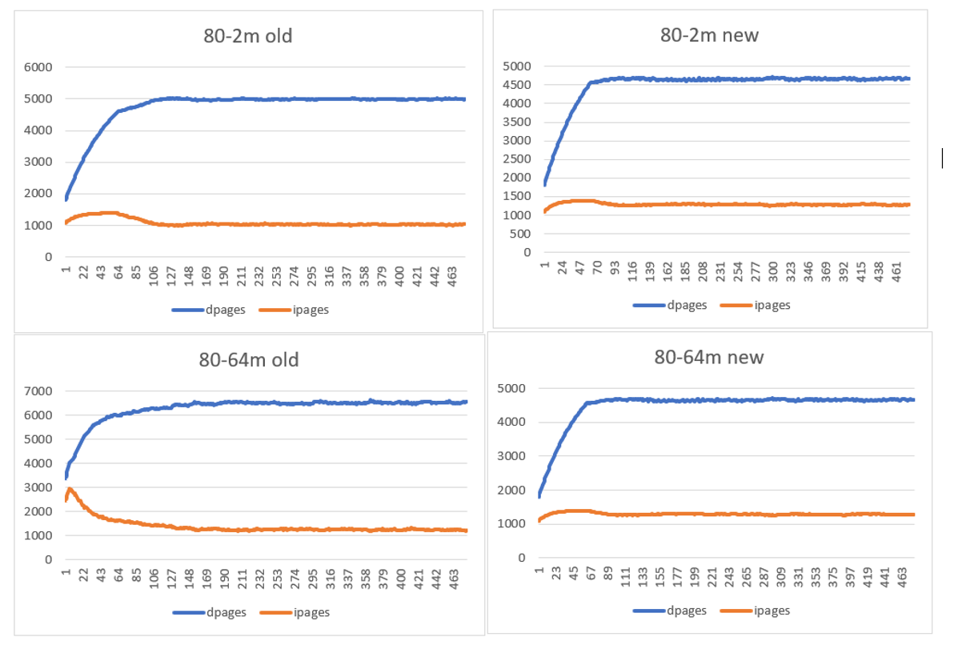

I did this change and here is a comparison of what i got. As you can see the index is penalized in the original code where as in the changed code the values seems to be more stable. Maybe I am missing something correct me if I am wrong.

Thank you,

Regards,

Moditha

- is related to

-

WT-4802 Enable and improve random dhandle selection and eviction target calculations

-

- Backlog

-