- Type: Bug
- Resolution: Done
- Priority: Major - P3
- None
- Affects Version/s: 4.4.1
- Component/s: None
- Storage - Ra 2021-05-31, Storage - Ra 2021-06-14
- 8

I see slower delete performance after the history store has grown. I don't know to what extent this behavior is expected.

This came up in the eMRCf testing described at WT-6776.

Setup: I start with 1 million smallish (50-200 byte) documents in a single collection on a PSA replica set. After creating the documents, I shut down the secondary. Because I am using the default enableMajorityReadConcern=true, this means that the primary stops advancing the stable timestamp.

I compare two different scenarios starting from this setup.

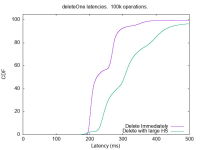

- Immediately delete 100,000 randomly chosen documents (1/10 of collection)

- Perform 8 million updates, 1 million inserts, 1 million deletes, keeping the collection size at 1 million documents, but growing the history store to 3.6 GB. Leave the system idle for 5 minutes so any delayed activity or checkpoints can quiesce. Then delete 100,000 documents at random, as in the first case.

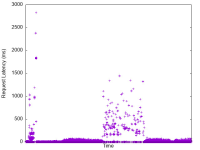

In both cases Genny reports the latency of each delete operation. In the second case, the deletes take noticeably longer to complete. I'll add the data in a comment.

Note that this workload is being driven through MongoDB, so each "delete" here is a deleteOne request that looks up a randomly chosen key and deletes the oldest document with that key. The collection is indexed on the key field, so the "delete" time includes the lookup as well as deleting both the document and the index entries that point to it. And, of course, since the stable timestamp isn't advancing, some (all?) of these deleted values get pushed into the history store.
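
For anyone who wants to reproduce the comparison outside Genny, here is a rough Python sketch of what each timed "delete" amounts to. It is not the actual Genny workload. The field name `key`, the helper names, and sampling keys without replacement are all assumptions made for illustration. `collection` can be any object with a pymongo-style `delete_one(filter)` method.

```python
import math
import random
import time


def timed_random_deletes(collection, keys, count, seed=0):
    """Delete `count` documents by randomly chosen key.

    Returns the per-operation latencies in seconds. The field name "key" is
    an assumption; the ticket does not name the indexed field.
    """
    rng = random.Random(seed)
    latencies = []
    # Assumption: keys are sampled without replacement from a list.
    for key in rng.sample(keys, count):
        start = time.perf_counter()
        # One deleteOne request: lookup via the index, then removal of the
        # document and the index entries that point to it.
        collection.delete_one({"key": key})
        latencies.append(time.perf_counter() - start)
    return latencies


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list, with pct in 0..100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(len(ordered) * pct / 100))
    return ordered[rank - 1]
```

Running this once right after setup and once after growing the history store, then comparing something like `percentile(latencies, 50)` and `percentile(latencies, 99)` between the two runs, should show the same kind of gap that Genny reports, assuming the collection handle comes from a client connected to the primary.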

I ran these tests with MongoDB 4.4.1.