Type: Task

Resolution: Done

Priority: Major - P3

Affects Version/s: None

Component/s: None


One of the goals of durable history was to enable MongoDB to sustain a "normal" workload while a point-in-time scan runs for 24 hours.

We should write a test that reproduces this scenario against MongoDB and run it to confirm that we have met the goal.

The workload should:

- Populate a database with 5 collections holding on the order of 50GB of data in total.

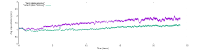

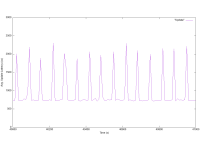

- Sustain a steady rate of inserts with an equivalent rate of deletes, and update existing documents. These rates should stay within the capacity of the provisioned system. I recommend 100 inserts, 100 deletes, and 100 updates per second.

- Run the test on a 3-node replica set.

- Update the MongoDB configuration settings so that a point-in-time scan can run for a day (ask alex.cameron which options are needed).

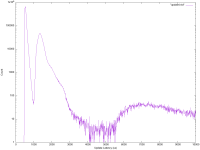

- Implement a scan query that runs for a day. It should be rate-limited, probably to ~10 operations/second, and cycle repeatedly through the dataset.
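
The population and write-rate targets in the list above imply a rough size for the initial load and for a day of churn. The sketch below works that out under explicit assumptions. It treats "50GB" as 50 GiB and assumes a 1 KiB average document, a size the ticket does not specify. `plan_workload` and `WorkloadPlan` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass

GIB = 1024 ** 3
SECONDS_PER_DAY = 24 * 60 * 60


@dataclass(frozen=True)
class WorkloadPlan:
    docs_per_collection: int  # documents to load into each collection up front
    inserts_total: int        # inserts issued over the whole run
    deletes_total: int        # deletes issued over the whole run
    updates_total: int        # updates issued over the whole run
    bytes_inserted: int       # bytes of new documents written over the run


def plan_workload(total_bytes: int, n_collections: int, avg_doc_bytes: int,
                  insert_rate: int, delete_rate: int, update_rate: int,
                  duration_s: int = SECONDS_PER_DAY) -> WorkloadPlan:
    """Back-of-envelope sizing for the initial load and the run's write volume."""
    if total_bytes <= 0 or n_collections <= 0 or avg_doc_bytes <= 0:
        raise ValueError("sizes and counts must be positive")
    total_docs = total_bytes // avg_doc_bytes
    return WorkloadPlan(
        docs_per_collection=total_docs // n_collections,
        inserts_total=insert_rate * duration_s,
        deletes_total=delete_rate * duration_s,
        updates_total=update_rate * duration_s,
        bytes_inserted=insert_rate * duration_s * avg_doc_bytes,
    )


# Assumptions: "50GB" read as 50 GiB, 1 KiB average documents, rates from the ticket.
plan = plan_workload(50 * GIB, n_collections=5, avg_doc_bytes=1024,
                     insert_rate=100, delete_rate=100, update_rate=100)
print(plan.docs_per_collection)  # 10485760
```

Under these assumptions, each collection starts with 10,485,760 documents. A day at the recommended rates issues 8,640,000 each of inserts, deletes, and updates, which is about 8.2 GiB of newly inserted documents. Because inserts and deletes are balanced, the live data set stays near its initial size. Even so, the server presumably has to retain history for that whole day of changes so the scan's point in time remains readable.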
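
The scan requirement (rate-limited, ~10 operations/second, cycling through the dataset for a day) can be sketched as two pieces: a fixed-rate pacer and a generator that pages through keys and wraps around at the end. This is a hedged sketch, not the test harness. `FixedRatePacer`, `cycling_scan`, `run_paced`, and the page-fetcher signature are hypothetical names, and MongoDB is stubbed with an in-memory key list. In a real harness, the fetcher would presumably run a range query on `_id` (greater than the last key seen, sorted, limited to the page size) against a single point in time. That wiring is not shown, and neither are the server options that keep the snapshot readable for 24 hours.

```python
import time
from typing import Callable, Iterator, List, Optional


class FixedRatePacer:
    """Spaces operations evenly at `rate_per_s` and never bursts to catch up."""

    def __init__(self, rate_per_s: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        if rate_per_s <= 0:
            raise ValueError("rate_per_s must be positive")
        self.interval = 1.0 / rate_per_s
        self.clock = clock
        self.sleep = sleep
        self.next_due = clock()

    def wait(self) -> None:
        """Block until the next operation slot."""
        now = self.clock()
        if now < self.next_due:
            self.sleep(self.next_due - now)
            self.next_due += self.interval  # advance from the slot, not from "now", to avoid drift
        else:
            self.next_due = now + self.interval  # running late: skip missed slots instead of bursting


# Hypothetical fetcher: up to `limit` keys greater than `after` (from the start if None), ascending.
FetchPage = Callable[[Optional[int], int], List[int]]


def cycling_scan(fetch_page: FetchPage, page_size: int) -> Iterator[List[int]]:
    """Yield pages of the dataset forever, wrapping to the start after the last page."""
    after: Optional[int] = None
    while True:
        page = fetch_page(after, page_size)
        if not page:
            if after is None:
                return  # empty dataset: nothing to cycle through
            after = None  # end of a pass: start the next one from the beginning
            continue
        yield page
        after = page[-1]


def run_paced(pacer: FixedRatePacer, ops: Iterator[List[int]], n_ops: int) -> List[List[int]]:
    """Issue up to n_ops operations, each in its own pacer slot."""
    results: List[List[int]] = []
    for _ in range(n_ops):
        pacer.wait()  # wait for the slot first, then issue the read
        page = next(ops, None)
        if page is None:
            break
        results.append(page)
    return results


# Demo with a fake clock and an in-memory "collection" of keys 1..7.
class FakeClock:
    def __init__(self) -> None:
        self.t = 0.0

    def now(self) -> float:
        return self.t

    def sleep(self, seconds: float) -> None:
        self.t += seconds


KEYS = list(range(1, 8))


def fetch_from_list(after: Optional[int], limit: int) -> List[int]:
    return [k for k in KEYS if after is None or k > after][:limit]


demo_clock = FakeClock()
demo_pacer = FixedRatePacer(10, clock=demo_clock.now, sleep=demo_clock.sleep)
demo_pages = run_paced(demo_pacer, cycling_scan(fetch_from_list, page_size=3), n_ops=5)
```

The same pacer could drive the write side, with one instance per stream at 100 operations/second for inserts, deletes, and updates. When the pacer falls behind, it skips missed slots rather than bursting. That way a slow period does not turn into a load spike that would itself show up as throughput instability.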

Talk to brian.lane about how to determine whether the workload is a success. My recommendation is to check for stable throughput at the expected rate, with no throughput stalls.
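
One way to make this success criterion concrete is sketched below. It assumes per-second throughput samples are collected for each operation type. The check requires the mean rate to stay within a tolerance of the target and flags any run of seconds that falls far below it. The 5% tolerance, the 50%-of-target stall threshold, and the zero-second stall allowance are illustrative assumptions, not agreed criteria. `check_throughput` is a hypothetical helper.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class StabilityReport:
    mean_rate: float        # average ops/s over all samples
    within_tolerance: bool  # mean is close enough to the expected rate
    longest_stall_s: int    # longest run of consecutive low-throughput seconds
    passed: bool


def check_throughput(samples: Sequence[float], expected_rate: float,
                     tolerance: float = 0.05, stall_fraction: float = 0.5,
                     max_stall_s: int = 0) -> StabilityReport:
    """Judge per-second throughput samples (ops/s) against an expected rate.

    A stall second is any second whose throughput is below stall_fraction * expected_rate.
    """
    if not samples:
        raise ValueError("need at least one sample")
    mean_rate = sum(samples) / len(samples)
    within = abs(mean_rate - expected_rate) <= tolerance * expected_rate
    floor = stall_fraction * expected_rate
    longest = current = 0
    for rate in samples:
        current = current + 1 if rate < floor else 0  # extend or reset the stall run
        longest = max(longest, current)
    return StabilityReport(mean_rate, within, longest,
                           passed=within and longest <= max_stall_s)


steady = check_throughput([98, 102, 100, 101, 99], expected_rate=100)
stalled = check_throughput([100, 100, 10, 0, 100], expected_rate=100)
print(steady.passed, stalled.passed)  # True False
```

The check could be run once per operation type over the full 24 hours: inserts, deletes, and updates at 100/s, and the scan at ~10/s. Checking stalls second by second catches short dips that an overall average would hide.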