-

Type:

Bug

-

Resolution: Done

-

Priority:

Major - P3

-

None

-

Affects Version/s: WT11.0.0

-

Component/s: Btree, Cache and Eviction

-

Environment:Run on Ubuntu 20, Linux kernel version 5.11. Database file is stored on an Samsung 970 pro 1TB SSD.

-

Storage Engines

-

c(3x10^8)-StorEng - 2023-11-14, 2023-11-28 - Anthill Tiger, 2023-12-12 - Heisenbug, 2024-01-09 - I Grew Tired, StorEng - 2024-01-23, 2024-02-06 tapioooooooooooooca, 2024-02-20_A_near-death_puffin, 2024-03-05 - Claronald, 2024-03-19 - PacificOcean

-

8

Summary

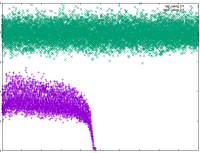

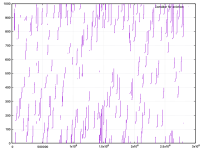

The eviction server thread, when exiting, will clear btree->evict_ref, which records the current point in traversing this btree, by calling __evict_clear_walk() when finishing this eviction run. This means the next background eviction thread would traverse the btree from the beginning, and if it finishes before scanning through the whole tree, the next one would again start from the beginning and parts of the tree would never be scanned for eviction.

This means the current eviction mechanism cannot adapt to certain access patterns in certain (but common) conditions and fail to decide hotness of data correctly and evict hot data from cache. Workloads focusing on former parts of the tree would be much slower than those focusing on latter parts because former parts are always scanned and evicted from cache while latter parts are persistent in cache.

Reproduction and Consequence

This issue occur in the following setup:

- I write an application that uses WiredTiger directly.

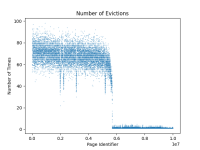

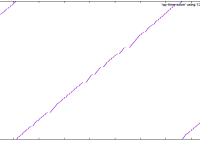

- The database is using a single B-tree, sequentially filling 10,000,000 key value pairs of (16 + 72) bytes resulting in a ~1G uncompressed database size. With compression enabled (snappy compression), the on-disk file size is ~500M.

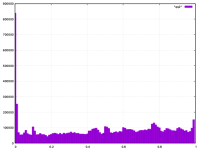

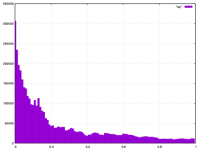

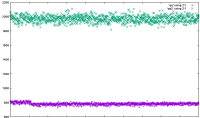

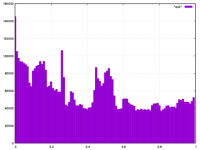

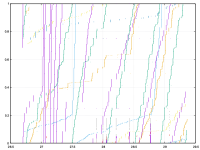

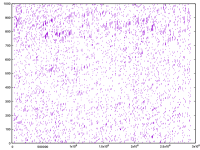

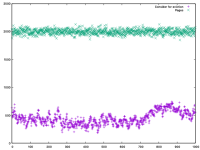

- Total available memory for the application is 1G, and the cache size of WiredTiger is set to 820000KB (but actually only ~700M is used by the application; this may be another issue.) The workload runs 2,000,000 get requests in a single thread, either uniformly random, or focusing (>80% of all requests) on the first half of keys, or focusing on the second half. The total latency differs a lot (see the table below). The reason is described as above, that the first half pages in the tree are always chosen for eviction regardless of the workload.

- Most parameters including those related to eviction, except for the cache size, are default.

I think any read workload with a single large btree and mild cache pressure (so the eviction thread finishes working before scanning through the whole tree) and penalizes cache miss heavily (e.g. by decompression cost) would reproduce similar results.

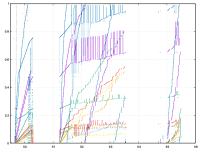

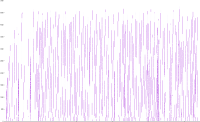

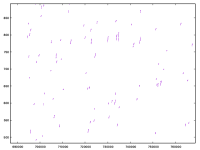

I have modified the code and verified that only the first half pages in the tree are frequently evicted from the cache regardless of the access pattern.

This behavior is checked to be true on WiredTiger version 10.0.0 (the current master branch) and 10.0.2 (the current develop branch)

| Workload | Focusing on first half | Uniform Random | Focusing on second half |

|---|---|---|---|

| Latency | 47s | 36s | 14s |

- related to

-

WT-11300 Investigation: Failure to Queue Pages with Many Updates in Eviction Process

-

- Closed

-