Type: Bug

Resolution: Done

Priority: Critical - P2

Affects Version/s: 3.0.6, 3.1.7

Component/s: WiredTiger

- test inserts 100 kB documents into a single, initially empty collection from multiple client threads

- 24 CPUs (12 cores x 2 hyperthreads), 64 GB memory, SSD storage

- standalone mongod (so no oplog); no journal; checkpoints disabled to reduce variability

- mongod restarted between tests for repeatability
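For readers who want to reproduce a similar load, here is a minimal sketch of a multi-threaded insert driver. It is not the harness used for these runs: `run_load`, `make_doc`, `DOC_SIZE`, and the `insert` callback are hypothetical names, and the document shape (a single binary field of about 100 kB) is an assumption, since the report only gives the document size.

```python
import threading
import time
from typing import Callable, Dict

# Assumed document shape: one ~100 kB binary field (the report gives only the size).
DOC_SIZE = 100 * 1024


def make_doc() -> Dict[str, bytes]:
    # Build a fresh dict per insert: pymongo's insert_one adds an "_id" key to the dict it is given.
    return {"payload": b"x" * DOC_SIZE}


def run_load(insert: Callable[[dict], None], threads: int, duration_s: float) -> float:
    """Call `insert` from `threads` worker threads for about `duration_s` seconds.

    Returns the aggregate throughput (inserts per second) summed over all workers.
    """
    counts = [0] * threads
    deadline = time.monotonic() + duration_s

    def worker(i: int) -> None:
        n = 0
        while time.monotonic() < deadline:
            insert(make_doc())
            n += 1
        counts[i] = n  # each worker writes only its own slot, so no lock is needed

    workers = [threading.Thread(target=worker, args=(i,)) for i in range(threads)]
    start = time.monotonic()
    for t in workers:
        t.start()
    for t in workers:
        t.join()
    return sum(counts) / (time.monotonic() - start)
```

With pymongo, `insert` could be `lambda d: coll.insert_one(d)` for `coll = MongoClient().test.load`, called once per thread count in (1, 2, 4, 8, 16). The runs described here also restarted mongod between thread counts, which this sketch does not do.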

The following graph shows five successive runs (with a mongod restart between runs) at 1, 2, 4, 8, and 16 client threads:

- aggregate throughput declines as the number of client threads increases

- disk is mostly idle, and becomes more so as the op rate declines

- CPU is mostly idle at all thread counts

- eviction activity increases as client threads increase

- yet bytes in cache also grow as the thread count increases: is eviction somehow unable to keep up with the (increasingly meager) insertion rate?

- memory allocations increase dramatically despite the lower insertion rate

- threads appear to be blocked trying to acquire pages, which probably accounts for the lower insertion rate

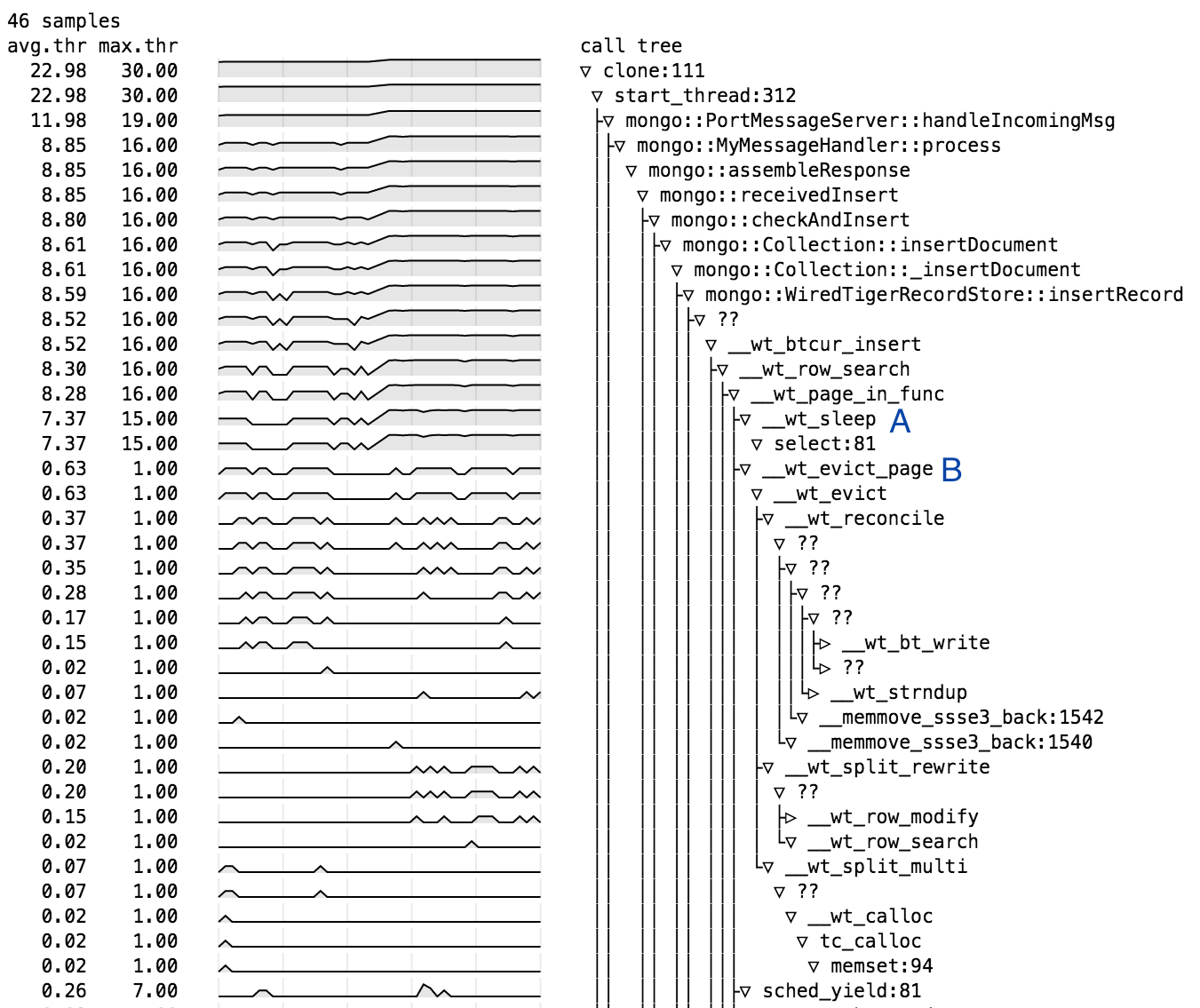
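The graph tracks bytes in cache and eviction activity across thread counts. One way to collect comparable numbers is to poll `serverStatus` while the load runs. The sketch below is a hypothetical helper, not the tooling behind the graph; `rates`, `sample`, and `cache_section` are illustrative names, and the WiredTiger cache statistic names it reads are assumptions that should be checked against the server version under test.

```python
import time
from typing import Callable, Dict, List

# Assumed field names under serverStatus()["wiredTiger"]["cache"]; they vary between versions.
GAUGE = "bytes currently in the cache"
COUNTERS = ("modified pages evicted", "unmodified pages evicted")


def cache_section(status: dict) -> dict:
    # Extract the WiredTiger cache statistics from a serverStatus-shaped dict.
    return status["wiredTiger"]["cache"]


def rates(prev: dict, cur: dict, elapsed_s: float) -> Dict[str, float]:
    """Cache size from `cur`, plus per-second deltas of the cumulative eviction counters."""
    row = {"bytes_in_cache": float(cur[GAUGE])}
    for key in COUNTERS:
        # Counters are cumulative since startup, so the rate is the delta over elapsed time.
        row[key + "/s"] = (cur[key] - prev[key]) / elapsed_s
    return row


def sample(get_status: Callable[[], dict], interval_s: float, n: int) -> List[Dict[str, float]]:
    """Poll get_status() n + 1 times, interval_s apart, and return n rows of rates."""
    prev, t_prev = cache_section(get_status()), time.monotonic()
    rows = []
    for _ in range(n):
        time.sleep(interval_s)
        cur, t_cur = cache_section(get_status()), time.monotonic()
        rows.append(rates(prev, cur, t_cur - t_prev))
        prev, t_prev = cur, t_cur
    return rows
```

With pymongo, `get_status` could be `lambda: client.admin.command("serverStatus")`. Separating the pure `rates` computation from the polling loop keeps the arithmetic easy to check independently of a running server.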

The following gdbmon profile shows two consecutive runs, with 2 and 16 client threads respectively.

In the second half of the timeline, which covers the run with 16 client threads:

- A: for much of the time, many or most of the 16 threads are waiting (sleeping)

- B: while a single thread is evicting

- is depended on by
  - SERVER-21442 WiredTiger changes for MongoDB 3.0.8 (Closed)
  - WT-1973 MongoDB changes for WiredTiger 2.7.0 (Closed)

- related to
  - SERVER-20409 Negative scaling with more than 10K connections (Closed)

- links to